Introduction

Containerization ushered in a new way to run workloads both on-prem and in the cloud securely and efficiently. By leveraging CGroups and Namespaces in the Linux kernel, applications can run isolated from each other in a secure and controlled manner while sharing the same kernel and machine hardware. While CGroups and Namespaces are a powerful way of defining isolation between applications, vulnerabilities have been found that allow an application to break out of its container. Additional measures such as SELinux can help keep applications inside their containers, but sometimes your application or workload needs more isolation than CGroups, Namespaces, and SELinux can provide.

There are multiple proposed solutions to this isolation challenge, including Amazon Firecracker, gVisor, and Kata Containers. Google’s gVisor takes one approach and leverages a guest kernel in user space to sandbox containerized applications. Because gVisor re-implements the Linux kernel syscalls, there can be compatibility issues, and not all syscalls have been fully implemented. Alternatively, both Firecracker and Kata Containers leverage a tried-and-true technology, virtualization, to create a complete sandbox around your containerized application. While Kata can work on a stand-alone machine by directly integrating with containerd or CRI-O, it is mainly used as part of a Kubernetes cluster.

The use of virtualization may seem like abandoning the last 5+ years of progress with containers, but this is not really the case. Kata Containers (Kata) creates a virtual machine instance using one of the four supported hypervisors; however, these are not your traditional virtual machines. Kata creates each VM using a highly optimized Linux guest kernel designed for running containerized workloads, with a boot path tuned for quick start times. Boot times for these virtual machine instances can be under 5 seconds, as can be seen in the kernel boot log:

[ 0.000000] Linux version 4.18.0-305.10.2.el8_4.x86_64 ([email protected]) (gcc version 8.4.1 20200928 (Red Hat 8.4.1-1) (GCC)) #1 SMP Mon Jul 12 04:43:18 EDT 2021

[ 0.000000] Command line: tsc=reliable no_timer_check rcupdate.rcu_expedited=1 i8042.direct=1 i8042.dumbkbd=1 i8042.nopnp=1 i8042.noaux=1 noreplace-smp reboot=k console=hvc0 console=hvc1 cryptomgr.notests net.ifnames=0 pci=lastbus=0 quiet panic=1 nr_cpus=6 scsi_mod.scan=none

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

...

[ 1.397176] NET: Registered protocol family 40

[ 1.481833] virtiofs virtio4: virtio_fs_setup_dax: No cache capability

[ 1.528980] scsi host0: Virtio SCSI HBA

[ 1.705952] printk: console [hvc0] enabled

[ 1.999535] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 2.144673] cgroup: cgroup: disabling cgroup2 socket matching due to net_prio or net_cls activation

[ 2.656168] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

NOTE: Depending on your specific hardware, startup times may be faster or slower.

In this post, we will take a closer look at Kata Containers and Red Hat OpenShift’s implementation of it, the OpenShift sandboxed containers Operator. We will not be delving into the design or architecture of Kata Containers itself. If you are interested in seeing what is behind the curtain, be sure to see the Kata Containers Architecture document on GitHub.

Deploying Kata Containers in OpenShift couldn’t be easier. Let’s get started.

Prerequisites

- Working OpenShift Bare Metal Cluster

- Administrator level privileges in the cluster

- The OpenShift oc command-line tool

We will be installing the OpenShift sandboxed containers Operator onto an existing OpenShift cluster. The cluster must be a Bare Metal cluster so that the virtualization extensions required by Kata Containers are available. You can also run OpenShift sandboxed containers using nested virtualization, but this is NOT a supported configuration, and setting up nested virtualization is left as an exercise for the reader.
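One way to confirm that an x86 node exposes the required virtualization extensions is to look for the Intel VT-x (`vmx`) or AMD-V (`svm`) CPU flags. The following is a minimal sketch, not part of the official procedure; the function name `has_virt_extensions` is made up for illustration, and it takes the cpuinfo path as an argument so it can also be pointed at a saved copy of the file.

```shell
# Hypothetical helper: succeeds if the given cpuinfo file lists the
# vmx (Intel VT-x) or svm (AMD-V) CPU flag. Defaults to /proc/cpuinfo.
has_virt_extensions() {
  cpuinfo="${1:-/proc/cpuinfo}"
  grep -E -q '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' "$cpuinfo"
}
```

On a worker node this could be run from a debug shell, for example after `oc debug node/<node-name>` and `chroot /host`, with `has_virt_extensions && echo "virtualization extensions found"`.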

Installing the OpenShift sandboxed containers Operator

As with most operators in OpenShift, the OpenShift sandboxed containers Operator can be installed from the UI or the command line. We will use the UI in this post, but if you prefer to install from the command line see the official documentation here: Deploying OpenShift sandboxed containers operator using the cli.

- Start by logging in to your cluster with Cluster Admin level permissions.

- Select “Operators” and then “OperatorHub” from the left-hand menu

- Search for “sandbox” and select the OpenShift sandboxed containers Operator

- Select “Install” and select all defaults

- Wait for the operator to complete the install

- Select the installed Operator

- Select “Create instance”

- Leave all config options as default and click Create

At this point, the operator will properly configure your cluster for Kata Containers. As a part of the install process, all worker nodes will be rebooted to install the proper features in your cluster.
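Because the worker nodes reboot during this step, it can take a while before the cluster is ready to run sandboxed workloads. A rough way to tell when it is done is to wait for the `kata` RuntimeClass to appear. The sketch below assumes the RuntimeClass is named `kata` (the name used later in this post) and that its presence is a reasonable signal that the install has finished; the function names, retry count, and delay are illustrative only.

```shell
# Hypothetical helper: succeeds once the named RuntimeClass exists.
runtimeclass_exists() {
  oc get runtimeclass "$1" -o name >/dev/null 2>&1
}

# Poll until the RuntimeClass shows up or the retry budget is used up.
# Arguments: name, attempts (default 60), delay in seconds (default 30).
wait_for_runtimeclass() {
  name="$1"; attempts="${2:-60}"; delay="${3:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if runtimeclass_exists "$name"; then
      echo "RuntimeClass $name is available"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "RuntimeClass $name not found after $attempts attempts" >&2
  return 1
}
```

Calling `wait_for_runtimeclass kata` blocks until the RuntimeClass is found or the attempts run out.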

Deploying a Test Application

Once the OpenShift sandboxed containers Operator has been installed in your cluster, we can start using it. To show how this works, we will take a well-known Kubernetes example application called “GuestBook-go” and run it in our cluster. We can then modify it to isolate one or all of the workloads in the Kata Containers runtime.

We will be using the code from https://github.com/xphyr/guestbook-go. This is a purpose-built fork of the Kubernetes example code https://github.com/kubernetes/examples/tree/master/guestbook-go. The code in the xphyr repository has been updated to build with a modern release of Go, and the JSON deployment files have been converted to YAML.

To start, clone the GitHub repository and then log in to your OpenShift cluster:

$ git clone https://github.com/xphyr/guestbook-go

$ oc login -u <username>

We will now create a new project called “guestbook” and then create a Redis instance in it. The file redis-deployment.yaml contains all the Kubernetes objects required to create a highly available Redis deployment: one Primary server and two Replica instances, along with the services required to access them.

$ oc new-project guestbook

Now using project "guestbook" on server

$ oc create -f redis-deployment.yaml

deployment.apps/redis-primary created

service/redis-primary created

deployment.apps/redis-replica created

service/redis-replica created

Validate that the Redis containers are up and running.

$ oc get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

redis-primary 1/1 1 1 92s

redis-replica 2/2 2 2 92s

Ensure that both the redis-primary and redis-replica deployments are in a ready state before continuing.
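Rather than re-running `oc get deployment` until everything is ready, the wait can be scripted with `oc rollout status`. This is a small sketch; the function name `wait_for_rollout` and the 120-second timeout are arbitrary choices for illustration.

```shell
# Hypothetical helper: block until each named Deployment finishes rolling out.
# Stops at the first Deployment that does not become ready within the timeout.
wait_for_rollout() {
  for d in "$@"; do
    oc rollout status "deployment/$d" --timeout=120s || return 1
  done
}
```

For this step the call would be `wait_for_rollout redis-primary redis-replica`; the same helper works later for the guestbook deployment.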

Now let’s deploy the guestbook-go application. We will start by deploying a traditional container.

$ oc create -f guestbook-deployment.yaml

deployment.apps/guestbook created

$ oc create -f guestbook-route.yaml

service/guestbook created

route.route.openshift.io/guestbook created

$ oc get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

guestbook 3/3 3 3 62s

redis-primary 1/1 1 1 4m58s

redis-replica 2/2 2 2 4m58s

Ensure that all three guestbook pods are running before continuing.

We also created an OpenShift Route so we can easily access our application. Get the route using the oc get route command:

$ oc get route

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

guestbook guestbook-guestbook.apps.ocp48.xphyrlab.net guestbook <all> None

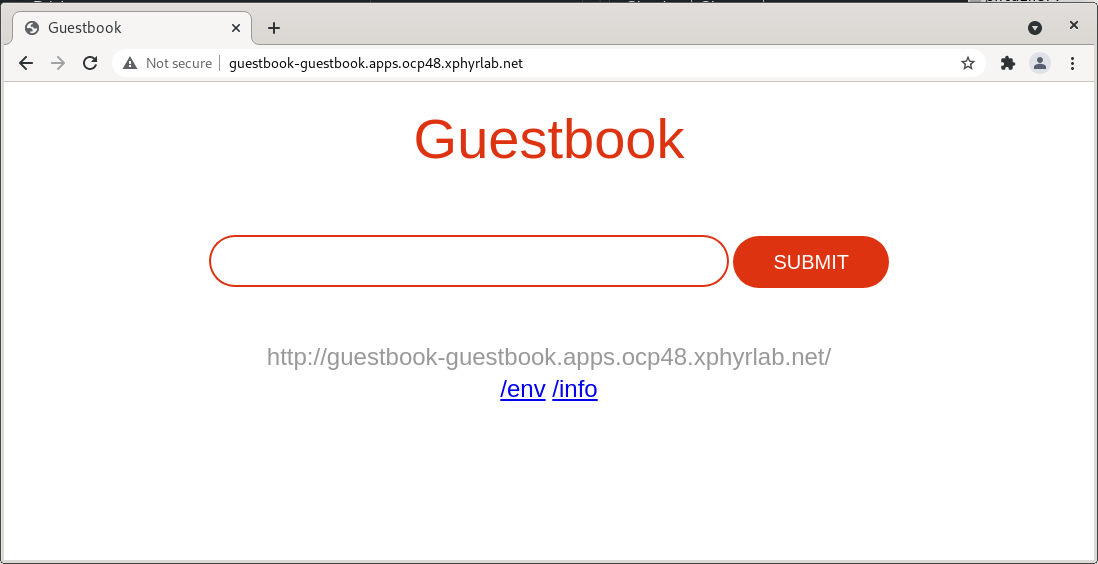

Copy the hostname output from your command and paste it into a web browser. You should get something like this:

To test that the application is fully working, enter values and click “SUBMIT”. The web page should update and display each of the entries you submit on the page.
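The route can also be checked from the command line, which is handy for repeating the check after later changes. The sketch below assumes the route is named `guestbook` and is served over plain HTTP, as the route output above suggests; the function names are illustrative.

```shell
# Hypothetical helper: print the host name of a Route in the current project.
route_host() {
  oc get route "$1" -o jsonpath='{.spec.host}'
}

# Hypothetical helper: print the HTTP status code returned by the Route's
# root URL (assumes an unencrypted route).
route_status() {
  curl -s -o /dev/null -w '%{http_code}' "http://$(route_host "$1")/"
}
```

Running `route_status guestbook` should print `200` when the application is reachable.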

Validate we are running in a “traditional” container

The next few commands will validate that we are running a traditional container. Using the oc exec command we will run a few commands within the container, and take note of the output. We will then compare the output when we run the commands in a Kata container later in this post.

$ oc get pods

NAME READY STATUS RESTARTS AGE

guestbook-67d7cb5d95-ddwph 1/1 Running 0 7m23s

guestbook-67d7cb5d95-dr6ff 1/1 Running 0 7m34s

guestbook-67d7cb5d95-vgrvw 1/1 Running 0 7m41s

redis-primary-5dc958f76d-vsd2l 1/1 Running 0 27m

redis-replica-df9f8bd7d-2zpt7 1/1 Running 0 27m

redis-replica-df9f8bd7d-7b6rf 1/1 Running 0 27m

$ oc exec -it pod/guestbook-67d7cb5d95-ddwph -- /bin/sh

NOTE: Be sure to update the oc exec command with a pod from your output

Within the pod, run the following commands:

$ cat /proc/cpuinfo | grep processor

processor : 0

processor : 1

processor : 2

processor : 3

$ dmesg

dmesg: read kernel buffer failed: Permission denied

$ free

total used free shared buff/cache available

Mem: 12280136 4343092 2021928 382100 5915116 7253904

Swap: 0 0 0

From the above output, note that we are unable to see the kernel boot messages (this will be important later) and that the node the container is running on has 4 CPUs and roughly 12 GB of RAM.
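To make the comparison easier to repeat, the CPU count and total memory can be reduced to single numbers. This is a minimal sketch that parses the same `/proc/cpuinfo` and `free` output shown above; the helper names are made up, and `free` is assumed to report values in KiB (its default).

```shell
# Hypothetical helper: count "processor" entries in cpuinfo-style text on stdin.
count_processors() {
  grep -c '^processor'
}

# Hypothetical helper: print total memory in GiB, rounded to one decimal
# place, given `free` output (in KiB) on stdin.
mem_total_gib() {
  awk '/^Mem:/ { printf "%.1f\n", $2 / 1024 / 1024 }'
}
```

For example, `oc exec pod/<pod-name> -- cat /proc/cpuinfo | count_processors` and `oc exec pod/<pod-name> -- free | mem_total_gib` collect both values without opening an interactive shell.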

Migrate to Kata Containers

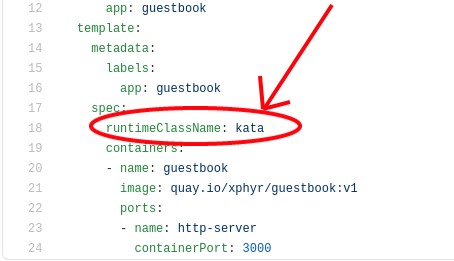

To use Kata Containers to run our container in a sandboxed environment we need to only make one small change to our deployment. Run oc edit deployment/guestbook and add runtimeClassName: kata to the spec.template.spec section of your YAML:

Your updated deployment should look like this:

Save your changes, quit the editor, and check that you are still able to access the application. Go back to your web browser where you have the Guestbook Application open and add a few more entries to the guestbook. You will see that the old entries are still there and that you can add new ones as well. The Guestbook application is now running in a sandboxed VM within OpenShift with NO changes to your code or your container image.

While we are using a Kubernetes Deployment to deploy our application, runtimeClassName is a field of the pod spec, so it can be set to kata in any object that defines pods, be it a Pod, ReplicaSet, Job, etc.
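The same change can be made without an interactive editor by applying a merge patch to the pod template. The following is a sketch under the assumption that the deployment is named `guestbook` and the runtime class is `kata`, as in this post; the helper names are illustrative.

```shell
# Hypothetical helper: build a JSON merge patch that sets the pod template's
# runtimeClassName to the given value.
runtime_class_patch() {
  printf '{"spec":{"template":{"spec":{"runtimeClassName":"%s"}}}}' "$1"
}

# Hypothetical helper: apply the patch to a Deployment.
# Arguments: deployment name, runtime class name.
set_runtime_class() {
  oc patch "deployment/$1" --type=merge -p "$(runtime_class_patch "$2")"
}

# Hypothetical helper: list each pod with the runtime class it requested.
list_pod_runtime_classes() {
  oc get pods -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.runtimeClassName}{"\n"}{end}'
}
```

After `set_runtime_class guestbook kata`, the deployment rolls out replacement pods, so expect new pod names; `list_pod_runtime_classes` should then show `kata` next to each guestbook pod and an empty value for the Redis pods.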

Validate we are running a Kata container

The next few commands will validate that we are running a Kata container. We will use the oc exec command to run a few commands within the container and then compare the output with what we saw in the Validate we are running in a “traditional” container section.

$ oc get pods

NAME READY STATUS RESTARTS AGE

guestbook-67d7cb5d95-ddwph 1/1 Running 0 7m23s

guestbook-67d7cb5d95-dr6ff 1/1 Running 0 7m34s

guestbook-67d7cb5d95-vgrvw 1/1 Running 0 7m41s

redis-primary-5dc958f76d-vsd2l 1/1 Running 0 27m

redis-replica-df9f8bd7d-2zpt7 1/1 Running 0 27m

redis-replica-df9f8bd7d-7b6rf 1/1 Running 0 27m

$ oc exec -it pod/guestbook-67d7cb5d95-ddwph -- /bin/sh

NOTE: Be sure to update the oc exec command with a pod from your output

Within the pod, run the following commands:

$ cat /proc/cpuinfo | grep processor

processor : 0

$ dmesg

[ 0.000000] Linux version 4.18.0-305.10.2.el8_4.x86_64 ([email protected]) (gcc version 8.4.1 20200928 (Red Hat 8.4.1-1) (GCC)) #1 SMP Mon Jul 12 04:43:18 EDT 2021

[ 0.000000] Command line: tsc=reliable no_timer_check rcupdate.rcu_expedited=1 i8042.direct=1 i8042.dumbkbd=1 i8042.nopnp=1 i8042.noaux=1 noreplace-smp reboot=k console=hvc0 console=hvc1 cryptomgr.notests net.ifnames=0 pci=lastbus=0 quiet panic=1 nr_cpus=6 scsi_mod.scan=none

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

[ 0.000000] x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

...

[ 1.397176] NET: Registered protocol family 40

[ 1.481833] virtiofs virtio4: virtio_fs_setup_dax: No cache capability

[ 1.528980] scsi host0: Virtio SCSI HBA

[ 1.705952] printk: console [hvc0] enabled

[ 1.999535] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 2.144673] cgroup: cgroup: disabling cgroup2 socket matching due to net_prio or net_cls activation

[ 2.656168] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

$ free

total used free shared buff/cache available

Mem: 1958252 32808 1841232 59996 84212 1778244

Swap: 0 0 0

Note that this time around there is only 1 processor available, we have only 2 GB of RAM, and we were able to get the entire kernel boot log. The log also shows that the Kata Container kernel took just over 2.5 seconds to start up. You are now successfully running your containerized workload in a sandboxed Kata Container.
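The startup time can be read off the boot log automatically by extracting the timestamp of the last line that matches a chosen message. This is an illustrative sketch; the helper name is made up, and treating the "link becomes ready" message as the end of boot is an assumption made for this example.

```shell
# Hypothetical helper: print the timestamp (in seconds) of the last kernel
# log line matching a pattern, reading dmesg-style text from stdin.
last_timestamp() {
  grep -- "$1" | tail -n 1 | sed -E 's/^\[ *([0-9]+\.[0-9]+)\].*/\1/'
}
```

For the log above, `oc exec pod/<pod-name> -- dmesg | last_timestamp 'link becomes ready'` prints `2.656168`.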

About Memory Usage and Allocation

As we saw in the last section, containers running in the Kata sandbox have less memory available to them than is available on the host worker node. This can be controlled through the use of Kubernetes requests and limits.

By adding a requests and limits section to our application deployment, we can increase the amount of resources available to our container. For example, we can set a request of 3Gi of memory and 2000m of CPU and a limit of 4Gi of memory and 4000m of CPU, as shown below:

resources:
  requests:
    memory: "3Gi"
    cpu: "2000m"
  limits:
    memory: "4Gi"
    cpu: "4000m"

If you run the commands from the Validate we are running a Kata container section again, you will find that the container now has 6Gi of memory and 5 CPUs available. This is because, by default, Kata Containers allocates 2Gi of RAM and 1 CPU to the VM, and any limits you set are added on top of that base: 2Gi + 4Gi = 6Gi of memory and 1 + 4 = 5 CPUs.
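The sizing rule described above can be written down as a small calculation. This sketch assumes the 2Gi / 1 CPU base stated in this post and, as a further assumption, rounds fractional CPU limits up to whole CPUs; the function name `kata_vm_size` is illustrative, and the real defaults depend on your Kata configuration.

```shell
# Hypothetical helper: estimate the size of the Kata VM from container limits.
# Arguments: memory limit in GiB, CPU limit in millicores.
kata_vm_size() {
  base_mem_gib=2   # default VM memory described in this post
  base_cpus=1      # default VM CPU count described in this post
  mem_limit_gib="$1"
  cpu_limit_millicores="$2"
  # Assumption: round the CPU limit up to whole CPUs before adding the base.
  limit_cpus=$(( (cpu_limit_millicores + 999) / 1000 ))
  echo "memory=$(( base_mem_gib + mem_limit_gib ))Gi cpus=$(( base_cpus + limit_cpus ))"
}
```

With the limits from the example, `kata_vm_size 4 4000` prints `memory=6Gi cpus=5`.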

Summary

The OpenShift sandboxed containers Operator can be used to install Kata Containers simply and easily into your existing bare metal OpenShift platform. For applications and workloads that require extreme sandboxing to ensure the security of your environment, it takes very little effort to convert your existing Kubernetes deployment to the Kata runtime, further isolating and securing the running application.