UPDATE: An updated blog post on this topic has been written and is available here: Creating a storage network in OpenShift

Introduction

OpenShift Container Platform and OpenShift Data Foundation can supply all your data storage needs. However, sometimes you want to use an external storage array directly over storage protocols such as NFS or iSCSI. In many cases these storage networks are served from dedicated network segments or VLANs and use dedicated network ports or network cards to handle the traffic.

On traditional operating systems like RHEL, you would use tools such as nmcli and NetworkManager to configure settings such as MTU or to create bonded connections, but in Red Hat Enterprise Linux CoreOS (RHCOS) these tools are not directly available to you. So how does one configure these advanced network settings in OpenShift and RHCOS? The answer is the NMState Operator.

The Kubernetes NMState Operator provides a Kubernetes API for performing state-driven network configuration across the OpenShift Container Platform cluster’s nodes with NMState. It lets users configure various network interface types, DNS, and routing on cluster nodes. Additionally, the daemons on the cluster nodes periodically report the state of each node’s network interfaces to the API server. As of OpenShift 4.9 this feature is still in Technology Preview. It is expected to become Generally Available (GA) in a future release of OpenShift Container Platform.

In this blog post we will show how to install the NMState Operator and use it to configure a secondary network device with an MTU of 9000 (also referred to as jumbo frames). We will also demonstrate how to test that the new setting is working and that your physical network layer is properly configured to take advantage of it.

Prerequisites

In order to use the NMState Operator you will need an OpenShift cluster that is version 4.6 or higher. You will also need to identify which nodes in your cluster will need access to the Storage Network and ensure that a secondary network card is assigned to each node and has an IP address.

Before proceeding, confirm that your physical network is properly configured to handle jumbo frames. We will go through some quick steps to validate the configuration later in the post.

Installing the NMState Operator

In order to take advantage of the NMState Operator you will need to install it on your cluster. This can be done through the OpenShift Console UI or from the command line.

Installing from the GUI

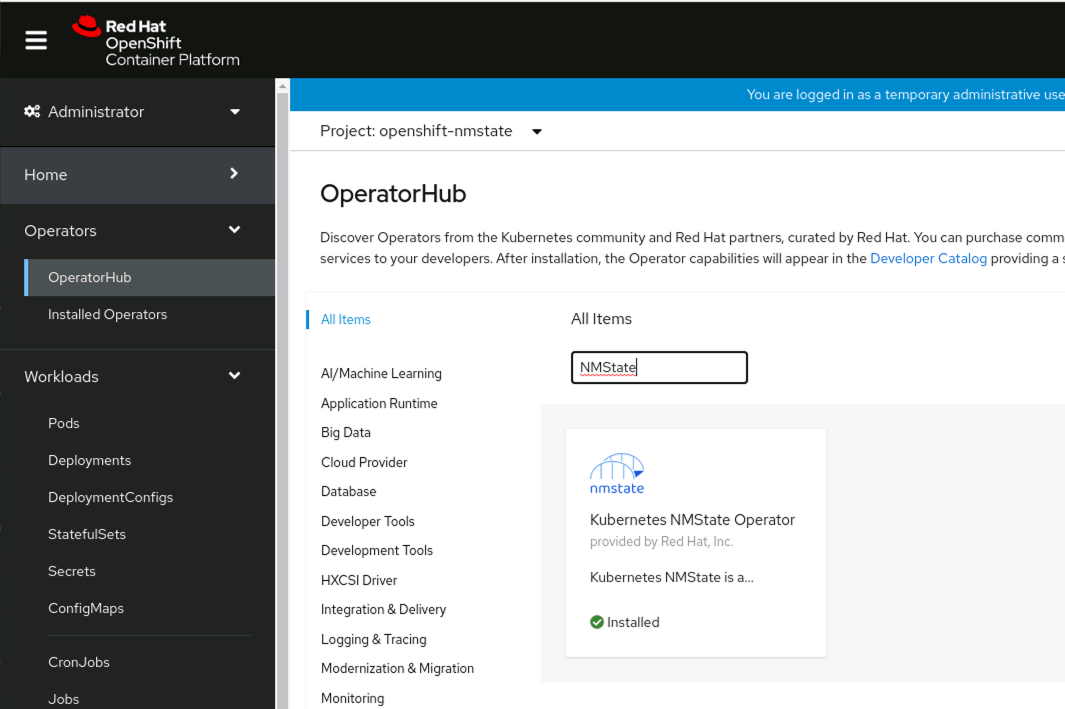

Start by logging into your cluster through the GUI with a user that has Cluster Administrator privileges. Once logged in, select “Operators -> OperatorHub” and search for NMState.

Select the Kubernetes NMState Operator and click Install. Under Installed Namespace, ensure the namespace is openshift-nmstate. If openshift-nmstate does not exist in the combo box, click Create Namespace, enter openshift-nmstate in the Name field of the dialog box, and press Create.
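For the command-line route, a minimal sketch is to create the namespace, an OperatorGroup, and a Subscription. The manifest below follows the usual Operator Lifecycle Manager pattern. The file name nmstate-operator.yml is hypothetical, and the channel, package name, and catalog source are assumptions that you should check against your cluster's catalog (for example with oc get packagemanifests -n openshift-marketplace) before applying.

```shell
# Write the install manifest to a file (the file name is a hypothetical choice).
# Assumed values: channel "stable", package "kubernetes-nmstate-operator",
# catalog source "redhat-operators". Verify them against your cluster's catalog.
cat > nmstate-operator.yml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-nmstate
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-nmstate
  namespace: openshift-nmstate
spec:
  targetNamespaces:
  - openshift-nmstate
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: kubernetes-nmstate-operator
  namespace: openshift-nmstate
spec:
  channel: stable
  name: kubernetes-nmstate-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF
```

Apply the file with oc apply -f nmstate-operator.yml and watch oc get csv -n openshift-nmstate until the operator reports Succeeded. Depending on the release, you may also need to create an NMState instance before policies are processed; the installation guide listed under References covers that step.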

Configuring the Secondary Interface Card

Once NMState is installed in your cluster you will need to identify the network interface name that corresponds to your storage network interface. Methods for identifying the proper interface can vary. In this post, we will identify the card based on its IP address; however, you can also use the MAC address to identify the proper card or cards.

Identifying your Secondary Network Interface Name

Start by connecting to one of your worker nodes, either through SSH or with the oc debug command:

    $ oc get nodes
    NAME                       STATUS   ROLES    AGE   VERSION
    ocp47-89pwv-master-0       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-master-1       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-master-2       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-worker-9hjtn   Ready    worker   16h   v1.20.0+9689d22
    ocp47-89pwv-worker-9mbkt   Ready    worker   7d    v1.20.0+9689d22
    ocp47-89pwv-worker-s2bbm   Ready    worker   7d    v1.20.0+9689d22

    $ oc debug node/ocp47-89pwv-worker-9hjtn

Once connected to your worker node, run ip address show and identify the interface that is on the Storage Network:

    # ip address show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens192: <BROADCAST,MULTICAST,ALLMULTI,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether 00:50:56:86:aa:32 brd ff:ff:ff:ff:ff:ff
    3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:50:56:86:4f:44 brd ff:ff:ff:ff:ff:ff
        inet 172.16.20.103/24 brd 172.16.20.255 scope global dynamic noprefixroute ens224
           valid_lft 417sec preferred_lft 417sec
        inet6 fe80::250:56ff:fe86:4f44/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

In the example above, our Storage Network is using the “172.16.20.0/24” network, so our target interface is ens224. We will use this information in the next step.
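If you need to repeat this lookup on several nodes, the search can be scripted. The sketch below assumes the one-line-per-address output of ip -o -4 addr show and does a simple string-prefix match, which is only adequate for networks that break on an octet boundary such as our /24. The function name iface_on_prefix is hypothetical.

```shell
# Print the interface(s) whose IPv4 address starts with the given prefix.
# Reads `ip -o -4 addr show` output on stdin, where each line looks like:
#   3: ens224    inet 172.16.20.103/24 brd 172.16.20.255 scope global ...
iface_on_prefix() {
  awk -v prefix="$1" '$3 == "inet" && index($4, prefix) == 1 { print $2 }'
}

# Example with captured lines; on a node you would pipe the live command instead:
#   ip -o -4 addr show | iface_on_prefix 172.16.20.
printf '%s\n' \
  '1: lo    inet 127.0.0.1/8 scope host lo' \
  '3: ens224    inet 172.16.20.103/24 brd 172.16.20.255 scope global dynamic noprefixroute ens224' \
  | iface_on_prefix 172.16.20.
```

Keep the trailing dot on the prefix so that 172.16.20. does not also match addresses such as 172.16.200.5.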

NOTE: If your cluster is made up of multiple types of servers using different network adapters, you will need to identify each card type separately and create a NodeNetworkConfigurationPolicy for each card type.

Creating a Node Network Configuration Policy

Now that we have identified the network interface that we will be using, we can create our NodeNetworkConfigurationPolicy (NNCP). Using your favorite text editor, create a file called storage-network-nmcp.yml and paste the following YAML into it:

    apiVersion: nmstate.io/v1alpha1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: storage-network-nncp
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: ens224
          type: ethernet
          state: up
          ipv4:
            dhcp: true
            enabled: true
          mtu: 9000

Update the “name: ens224” and “mtu: 9000” values to match the interface name you found in the previous step and the MTU that your storage network requires.

NOTE: If you have multiple adapter types or interface names, you will need to create multiple NodeNetworkConfigurationPolicies, one per interface name, and then use the nodeSelector and node labels to target each network interface type.

Applying your Configuration

We can now apply the NodeNetworkConfigurationPolicy we created in the last step. Use the oc command to apply the YAML to your cluster:

    $ oc create -f storage-network-nmcp.yml
    nodenetworkconfigurationpolicy.nmstate.io/storage-network-nncp created

The NMState Operator will now take over and apply the configuration to your cluster. To check on the state of the changes, use the oc get nnce command, which lists the NodeNetworkConfigurationEnactment created for each node:

    $ oc get nnce
    NAME                                  STATUS
    ocp47-89pwv-master-0.ens224-mtu       NodeSelectorNotMatching
    ocp47-89pwv-master-1.ens224-mtu       NodeSelectorNotMatching
    ocp47-89pwv-master-2.ens224-mtu       NodeSelectorNotMatching
    ocp47-89pwv-worker-9hjtn.ens224-mtu   SuccessfullyConfigured
    ocp47-89pwv-worker-9mbkt.ens224-mtu   SuccessfullyConfigured
    ocp47-89pwv-worker-s2bbm.ens224-mtu   SuccessfullyConfigured

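When all of the worker nodes show SuccessfullyConfigured, you can move on to the next steps. The master nodes report NodeSelectorNotMatching because the policy's nodeSelector targets only workers, which is expected.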
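On a cluster with many nodes, scanning this list by eye gets tedious. As a rough sketch, the helper below filters the two-column output shown above and prints any enactment that is neither SuccessfullyConfigured nor NodeSelectorNotMatching. The function name nnce_pending is hypothetical, and other releases may print additional columns, in which case the field numbers would need adjusting.

```shell
# List enactments that still need attention.
# Reads `oc get nnce` output (NAME and STATUS columns, as shown in this post) on stdin.
nnce_pending() {
  awk 'NR > 1 && NF >= 2 && $2 != "SuccessfullyConfigured" && $2 != "NodeSelectorNotMatching" { print $1 }'
}

# On a cluster you would run:
#   oc get nnce | nnce_pending
# An empty result means every targeted node has been configured.
```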

Validating your New Settings

We can now check that the settings were properly applied at the node level, and test end-to-end connectivity with a jumbo frame ping.

Start by connecting to the worker node we used in the previous section.

    $ oc get nodes
    NAME                       STATUS   ROLES    AGE   VERSION
    ocp47-89pwv-master-0       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-master-1       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-master-2       Ready    master   7d    v1.20.0+9689d22
    ocp47-89pwv-worker-9hjtn   Ready    worker   16h   v1.20.0+9689d22
    ocp47-89pwv-worker-9mbkt   Ready    worker   7d    v1.20.0+9689d22
    ocp47-89pwv-worker-s2bbm   Ready    worker   7d    v1.20.0+9689d22

    $ oc debug node/ocp47-89pwv-worker-9hjtn

Check to ensure that the network interface shows the new MTU by running the ip address show command again.

    # ip address show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens192: <BROADCAST,MULTICAST,ALLMULTI,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
        link/ether 00:50:56:86:aa:32 brd ff:ff:ff:ff:ff:ff
    3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
        link/ether 00:50:56:86:4f:44 brd ff:ff:ff:ff:ff:ff
        inet 172.16.20.103/24 brd 172.16.20.255 scope global dynamic noprefixroute ens224
           valid_lft 417sec preferred_lft 417sec
        inet6 fe80::250:56ff:fe86:4f44/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

NOTE: The MTU for the ens224 adapter now has a value of 9000 as we specified in the NodeNetworkConfigurationPolicy.

We can run an end-to-end test to validate that our jumbo frame setting works at the network layer by pinging the storage array. You will need to know the IP address of your storage array before running the next step. In the example command, our storage array uses the IP address 172.16.20.10:

    # ping -M do -s 8972 -c 5 172.16.20.10
    PING 172.16.20.10 (172.16.20.10) 8972(9000) bytes of data.
    8980 bytes from 172.16.20.10: icmp_seq=1 ttl=64 time=0.569 ms
    8980 bytes from 172.16.20.10: icmp_seq=2 ttl=64 time=0.587 ms
    8980 bytes from 172.16.20.10: icmp_seq=3 ttl=64 time=0.804 ms
    8980 bytes from 172.16.20.10: icmp_seq=4 ttl=64 time=0.641 ms
    8980 bytes from 172.16.20.10: icmp_seq=5 ttl=64 time=0.717 ms

    --- 172.16.20.10 ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4091ms
    rtt min/avg/max/mdev = 0.569/0.663/0.804/0.091 ms

If your ping command is successful, congratulations: your network interface is now properly configured for jumbo frames.
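The -s 8972 value in the ping command comes from subtracting the 20-byte IPv4 header and the 8-byte ICMP header from the 9000-byte MTU, and -M do forbids fragmentation so that an oversized packet fails instead of being silently split. If your storage network uses a different MTU, a small helper gives the matching payload size. The name icmp_payload_for_mtu is hypothetical, and the calculation assumes IPv4 without IP options.

```shell
# Largest ICMP echo payload that fits in a single unfragmented IPv4 packet.
# Assumes a 20-byte IPv4 header (no options) and an 8-byte ICMP header.
icmp_payload_for_mtu() {
  echo $(( $1 - 20 - 8 ))
}

icmp_payload_for_mtu 9000   # prints 8972
icmp_payload_for_mtu 1500   # prints 1472
```

On a node, the result can be passed straight to ping, for example ping -M do -s "$(icmp_payload_for_mtu 9000)" -c 5 172.16.20.10.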

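You may instead get one of two errors. If you get an error such as ping: local error: Message too long, mtu=1500, the node's interface MTU is not configured correctly. The mtu value in the message is the MTU actually in effect on the sending interface, so anything lower than 9000 means the new setting was not applied. Check the settings on your node again.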

The other error that can happen is packet loss and ping failure which will look like this:

    # ping -M do -s 8972 -c 5 172.16.20.10
    PING 172.16.20.10 (172.16.20.10) 8972(9000) bytes of data.

    --- 172.16.20.10 ping statistics ---
    5 packets transmitted, 0 received, 100% packet loss, time 4116ms

This indicates that the physical network layer is not properly configured for jumbo frames with a 9000-byte MTU. Check your networking equipment, or contact your friendly network administrator to see if they can help fix the issue.

Advanced Configuration - Creating a Bond

What if you want to do something a little more complicated, such as bonding a pair of network interfaces for network redundancy? This can also be achieved with the NMState Operator and a NodeNetworkConfigurationPolicy. You will need to know the names of the interfaces that you want to bond.

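We will update our previous NodeNetworkConfigurationPolicy file, _storage-network-nmcp.yml_, to add the network bond and preserve our MTU setting of 9000. In the example configuration file below, ens224 and ens256 are the two interfaces that are connected to our storage network. Note that this example uses apiVersion nmstate.io/v1beta1 while the earlier one used nmstate.io/v1alpha1; use the version that your cluster serves, which you can check with oc api-resources --api-group=nmstate.io.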

    apiVersion: nmstate.io/v1beta1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: storage-network-nncp
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: bond0
          type: bond
          state: up
          ipv4:
            dhcp: true
            enabled: true
          link-aggregation:
            mode: active-backup
            options:
              miimon: '140'
            port:
            - ens224
            - ens256
          mtu: 9000

NOTE: For additional information about creating a bond and the options you can use here, see Example: Bond interface node network configuration policy.

To confirm that the new configuration is correct, repeat the steps from the Validating your New Settings section above, checking bond0 instead of ens224.

Summary

The NMState Operator gives OpenShift administrators much more advanced control over the additional network cards installed in their worker nodes. The examples shown here focus on remote storage and a storage network, but the same steps can be used for other advanced networking scenarios, such as those seen in the telecommunications sector. NMState is also used extensively in OpenShift Virtualization and KubeVirt.

References

Installing the Kubernetes NMState Operator

Example NMState Policies