Introduction

While working with OpenShift Routes recently, I came across an application deployment that was not working. OpenShift was returning an “Application is not Available” page, even though the application pod was up and the service was properly configured and mapped. After some additional troubleshooting, we traced the problem back to how the OpenShift router communicates with an application pod. Depending on your route type, the router will use HTTP, HTTPS, or passthrough TCP to communicate with your application. By better understanding the traffic flow and the protocol used, we were able to quickly resolve the issue and get the application up and running. With this in mind, I figured it would make sense to share the experience so that others could benefit from it.

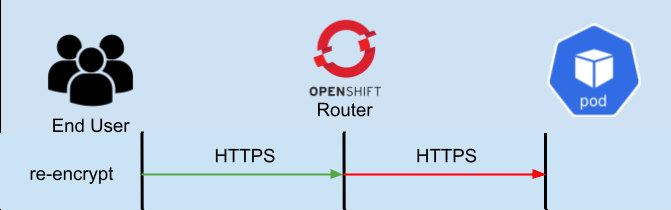

OpenShift Router Configurations

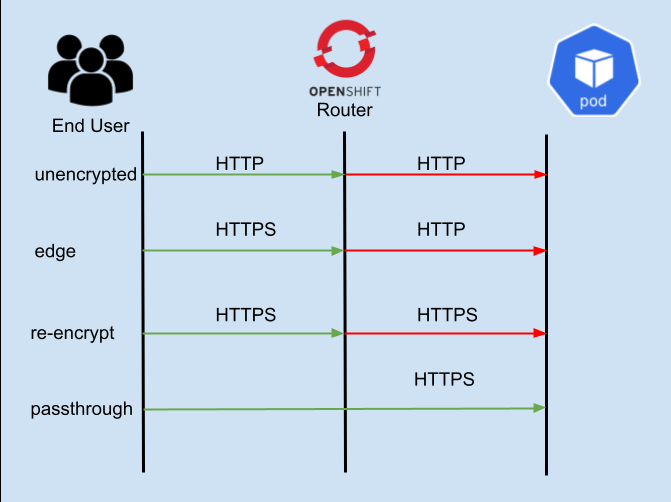

Let’s start by talking about the four main configuration types for an OpenShift Router:

- “Unencrypted” - This is the simplest configuration, and uses no encryption. The router talks to the hosted application via HTTP.

- “Edge” - In edge configuration, the user connects to the OpenShift Router over HTTPS, and then the OpenShift router connects to your service via HTTP. This is a very important detail, so we will state it again… the OpenShift router connects to your application via HTTP protocol.

- “Re-encrypt” - In re-encrypt mode, the user connects to the OpenShift Router over HTTPS, and then the OpenShift router makes a new proxy connection to your application over HTTPS. For this to work, your application must support HTTPS/TLS and the OpenShift router must be able to trust the certificate that is being used by your application. More on this below.

- “Passthrough” - In passthrough mode, the OpenShift router is not a part of the TLS handshake at all. The end user/client creates a secure connection directly with the Kubernetes pod, and the OpenShift router passes the encrypted traffic through without terminating it.

The image above will help to visualize these different connections. The arrows in Green show the client connection and where the connection is terminated. The arrows in Red show the connection from the OpenShift router to the application pod. So for example, in edge the client TLS connection terminates at the OpenShift router, and in passthrough it terminates at the Kubernetes pod.
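As a compact restatement of the four route types, here is a small Go sketch that tabulates the protocol used on each leg of the connection. The type name `hops`, the variable `routeHops`, and the map keys are labels invented for this illustration; they are not values read from the OpenShift API.

```go
package main

import "fmt"

// hops describes the two legs of a request for one route type.
type hops struct {
	clientToRouter string // protocol between the client and the router
	routerToPod    string // protocol between the router and the pod
	tlsEndsAt      string // where the client's TLS session terminates
}

// routeHops restates the four route types described above. The keys are
// labels chosen for this sketch, not values taken from the OpenShift API.
var routeHops = map[string]hops{
	"unencrypted": {"HTTP", "HTTP", "nowhere (no TLS)"},
	"edge":        {"HTTPS", "HTTP", "router"},
	"reencrypt":   {"HTTPS", "HTTPS", "router"},
	// In passthrough the router forwards the client's TLS stream unchanged.
	"passthrough": {"HTTPS", "HTTPS", "pod"},
}

func main() {
	for _, name := range []string{"unencrypted", "edge", "reencrypt", "passthrough"} {
		h := routeHops[name]
		fmt.Printf("%-12s client->router: %-5s  router->pod: %-5s  client TLS ends at: %s\n",
			name, h.clientToRouter, h.routerToPod, h.tlsEndsAt)
	}
}
```

The row to remember is edge: the client speaks HTTPS, but the pod receives plain HTTP.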

Next up, let’s try out these different router configurations and see how they work in practice.

Prerequisites

You will need an OpenShift cluster up and running to fully test this procedure. In addition you will require the following:

- oc - The OpenShift client, download from here

- openssl - Use your package manager to install openssl on your client (it may already be installed)

Trying this in practice

So what does this look like in practice? We will use a very simple Go program that creates an HTTP server on port 8080 and an HTTPS server on port 8443. You can build your own image from it, or use a pre-published image of this application from here: markd/testserver. This container image automatically generates a new certificate every time it starts. The full source for this example program, including the Dockerfile used to create the published Quay.io container image, can be found here.

    package main

    import (
        "fmt"
        "log"
        "net/http"
    )

    func main() {
        mux := http.NewServeMux()
        mux.HandleFunc("/", func(res http.ResponseWriter, req *http.Request) {
            fmt.Fprint(res, "Hello Custom World!")
        })
        // setup routes for mux: define your endpoints

        errs := make(chan error, 1) // a channel for errors
        go serveHTTP(mux, errs)     // start the HTTP server in a goroutine
        go serveHTTPS(mux, errs)    // start the HTTPS server in a goroutine
        log.Fatal(<-errs)           // block until one of the servers writes an error
    }

    // serveHTTP serves plain HTTP on port 8080.
    func serveHTTP(mux *http.ServeMux, errs chan<- error) {
        errs <- http.ListenAndServe(":8080", mux)
    }

    // serveHTTPS serves HTTPS on port 8443 using the certificate and key in /app.
    func serveHTTPS(mux *http.ServeMux, errs chan<- error) {
        errs <- http.ListenAndServeTLS(":8443", "/app/localhost.crt", "/app/localhost.key", mux)
    }

NOTE: If you choose to build your own container image, you will need to generate the /app/localhost.crt and /app/localhost.key files for the application to run.

Now let’s create an instance of this application and the associated services so that we can do our testing. Create a file called “testdeployment.yaml” with the following contents:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: testserver
      namespace: ocpocroutes
      labels:
        app: testserver
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: testserver
      template:
        metadata:
          labels:
            app: testserver
        spec:
          containers:
            - name: testserver
              image: quay.io/markd/testserver:latest
              imagePullPolicy: IfNotPresent
              ports:
                - name: http
                  containerPort: 8080
                - name: https
                  containerPort: 8443

Then create the project (its name must match the namespace used in the YAML file) and apply the file to your cluster with the oc command:

    $ oc new-project ocpocroutes
    $ oc create -f testdeployment.yaml
    $ oc get pod

Now let’s define the service ports by creating a file called testsvs.yaml:

    kind: Service
    apiVersion: v1
    metadata:
      name: testserver
      namespace: ocpocroutes
    spec:
      ports:
        - name: testserver-webport
          protocol: TCP
          port: 8080
          targetPort: 8080
        - name: testserver-secure
          protocol: TCP
          port: 8443
          targetPort: 8443
      internalTrafficPolicy: Cluster
      type: ClusterIP
      selector:
        app: testserver

Apply it to your cluster:

    $ oc create -f testsvs.yaml
    service/testserver created
    $ oc get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
    testserver   ClusterIP   172.30.81.186   <none>        8080/TCP,8443/TCP   3s

OK, with our application running and our services defined, let’s start making some routes.

Creating Routes

We will use the oc command to create all of our routes. They can also be created from YAML files, and examples of the YAML for each of the routes we will create today can be found here.

Simple HTTP route

We will start with the simplest route:

    $ oc expose service testserver --port=8080
    $ oc get route

Now let’s test, using the host name that oc get route reports for your cluster:

    $ curl http://testserver-testserver.apps.acm.xphyrlab.net
    Hello Custom World!

But that is not very secure, so let’s try edge encryption, which leverages the OpenShift wildcard certificate.

Edge Encrypted

    $ oc create route edge edgetype --service testserver --port=8080
    route.route.openshift.io/edgetype created
    $ oc get route
    NAME                HOST/PORT                                            PATH   SERVICES     PORT   TERMINATION   WILDCARD
    edgetype            edgetype-testserver.apps.acm.xphyrlab.net                   testserver   8080   edge          None
    testserver          testserver-testserver.apps.acm.xphyrlab.net                 testserver   8080                 None

And now let’s try connecting to that new route:

    $ curl https://edgetype-testserver.apps.acm.xphyrlab.net
    curl: (60) SSL certificate problem: self-signed certificate in certificate chain
    More details here: https://curl.se/docs/sslcerts.html

    curl failed to verify the legitimacy of the server and therefore could not
    establish a secure connection to it. To learn more about this situation and
    how to fix it, please visit the web page mentioned above.

Since the cluster I am using for testing has a self-signed certificate, we need to tell curl to skip certificate verification for now in order to connect:

    $ curl -k https://edgetype-testserver.apps.acm.xphyrlab.net
    Hello Custom World!

Edge but to the wrong port

What would happen if we tried to connect to the application’s HTTPS port using an edge route?

    $ oc create route edge edgetypewrongport --service testserver --port=8443
    route.route.openshift.io/edgetypewrongport created
    $ oc get route
    NAME                HOST/PORT                                            PATH   SERVICES     PORT   TERMINATION   WILDCARD
    edgetype            edgetype-testserver.apps.acm.xphyrlab.net                   testserver   8080   edge          None
    edgetypewrongport   edgetypewrongport-testserver.apps.acm.xphyrlab.net          testserver   8443   edge          None
    testserver          testserver-testserver.apps.acm.xphyrlab.net                 testserver   8080                 None

Let’s connect to the wrong port:

    $ curl -k https://edgetypewrongport-testserver.apps.acm.xphyrlab.net
    Client sent an HTTP request to an HTTPS server.

So what happened there? Because this is an edge route, the router sent a plain HTTP request to port 8443. The Go http library is smart enough to recognize an HTTP request sent to an HTTPS server and returns the error “Client sent an HTTP request to an HTTPS server.” Not all web servers do this, though. Some will send a 302 redirect, and some will just drop the connection. This can lead to strange behavior from the OpenShift router: depending on how your application handles HTTP traffic on an HTTPS port, you may get errors stating that the application is unavailable.

Passthrough

We can create a “passthrough” route, which will use the certificate that is inside the application pod.

    $ oc create route passthrough passthrough --service testserver --port=8443
    route.route.openshift.io/passthrough created
    $ oc get route
    NAME                HOST/PORT                                            PATH   SERVICES     PORT   TERMINATION   WILDCARD
    edgetype            edgetype-testserver.apps.acm.xphyrlab.net                   testserver   8080   edge          None
    edgetypewrongport   edgetypewrongport-testserver.apps.acm.xphyrlab.net          testserver   8443   edge          None
    passthrough         passthrough-testserver.apps.acm.xphyrlab.net                testserver   8443   passthrough   None
    testserver          testserver-testserver.apps.acm.xphyrlab.net                 testserver   8080                 None

Now let’s connect to that route:

    $ curl -k https://passthrough-testserver.apps.acm.xphyrlab.net
    Hello Custom World!

To validate that we are using the certificate inside the pod, let’s get the certificate information:

    $ openssl s_client -showcerts -servername \
        passthrough-testserver.apps.acm.xphyrlab.net -connect \
        passthrough-testserver.apps.acm.xphyrlab.net:443 </dev/null
    ...
    ---
    Server certificate
    subject=CN = localhost
    issuer=CN = localhost
    ---

There will be a lot of output, but what we are looking for is “subject=CN” set to localhost (this is how the certificate is created inside the pod). Compare this to what we get if we check the “subject=CN” for our edge route, which uses the wildcard certificate:

    $ openssl s_client -showcerts \
        -servername edgetype-testserver.apps.acm.xphyrlab.net \
        -connect edgetype-testserver.apps.acm.xphyrlab.net:443 </dev/null

You should now see a subject of the form “subject=CN = *.<your cluster name here>”.

Re-encrypted Route

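So what if we want to use the OpenShift cluster wildcard certificate, but we also want all traffic inside the OpenShift SDN to be encrypted? In this case we need a “reencrypt” route. For this to work, we need to tell the OpenShift router to trust the self-signed certificate that our application serves. Keep in mind that the test image generates a new certificate every time it starts, so a saved copy will no longer match after the pod restarts. We can retrieve the current certificate through the passthrough route using the openssl command: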

    $ echo | openssl s_client -servername \
        passthrough-testserver.apps.acm.xphyrlab.net \
        -connect passthrough-testserver.apps.acm.xphyrlab.net:443 2>/dev/null | openssl x509 > destcert.pem
    $ cat destcert.pem
    -----BEGIN CERTIFICATE-----
    MIIFCTCCAvGgAwIBAgIUPMc4R60VcjfQkb4Dh3fHpgZK+BowDQYJKoZIhvcNAQEL
    ...
    v5kWNzMVn+opQHhWo9jZJPQEubnbTUqbDy9YBLdrMwNIo2WusYWWWsz7f4kHM3aX
    hlPf0VtjxPkKxGajOW2lq4PIE+mAnUa761Z/FY0EIQXi2OOCtv9AplW5bcAx
    -----END CERTIFICATE-----

We can use this information to create our re-encrypt route.

    $ oc create route reencrypt reencrypt --service testserver --port=8443 --dest-ca-cert=./destcert.pem
    route.route.openshift.io/reencrypt created
    $ oc get route
    NAME                HOST/PORT                                            PATH   SERVICES     PORT   TERMINATION   WILDCARD
    edgetype            edgetype-testserver.apps.acm.xphyrlab.net                   testserver   8080   edge          None
    edgetypewrongport   edgetypewrongport-testserver.apps.acm.xphyrlab.net          testserver   8443   edge          None
    passthrough         passthrough-testserver.apps.acm.xphyrlab.net                testserver   8443   passthrough   None
    reencrypt           reencrypt-testserver.apps.acm.xphyrlab.net                  testserver   8443   reencrypt     None
    testserver          testserver-testserver.apps.acm.xphyrlab.net                 testserver   8080                 None
    $ curl -k https://reencrypt-testserver.apps.acm.xphyrlab.net
    Hello Custom World!

To see which certificate the router presents to the client on this route, let’s get the certificate information:

    $ openssl s_client -showcerts \
        -servername reencrypt-testserver.apps.acm.xphyrlab.net \
        -connect reencrypt-testserver.apps.acm.xphyrlab.net:443 </dev/null
    ...
    ---
    Server certificate
    subject=CN = *.apps.acm.xphyrlab.net
    issuer=CN = ingress-operator@1658519999
    ---
    ...

You will notice in the output that the certificate being used is the cluster’s “wildcard” certificate. Together with the successful response from port 8443, this indicates that the OpenShift router is connecting securely to the application’s HTTPS port, then re-encrypting the data and serving it to the client using the wildcard certificate from the cluster.

Conclusion

OpenShift supplies a simple but powerful way to make your OpenShift-hosted applications available to your end users/clients via OpenShift Routes. Understanding the different methods that the OpenShift router uses to connect to your application, and what they mean from your end users’ perspective, is key to making the best use of OpenShift and to troubleshooting it when you have an issue.