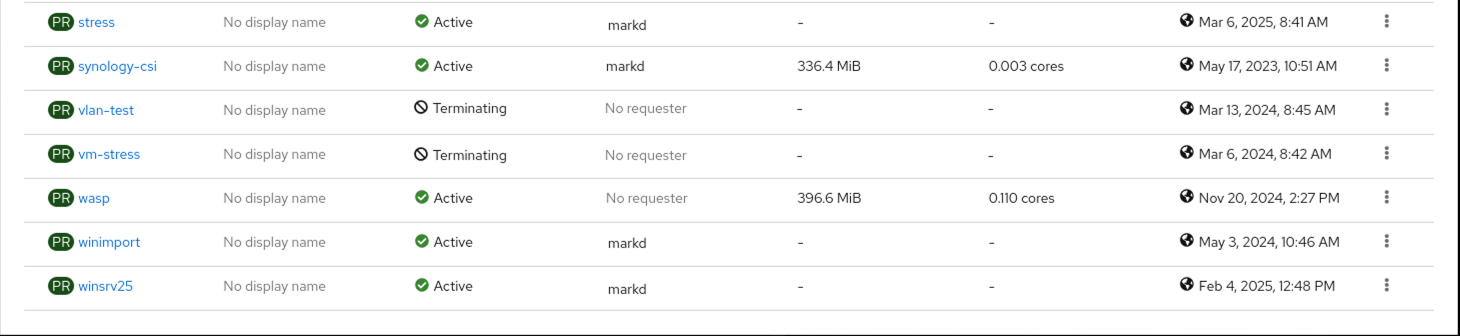

This is going to be another quick blog post. I was working on my lab OpenShift cluster and came across two projects (namespaces) that were stuck in a Terminating state.

So what's an OpenShift admin to do? That will be the topic of today’s blog post. Read on if you want to know one way to handle this.

Troubleshooting

These Terminating namespaces had obviously been there for a while, but there was no obvious reason why they were stuck in this state. So I did what any good OpenShift admin would do: use FORCE.

No, not that force, this --force:

$ oc delete project vlan-test --force

Warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

namespace "vlan-test" force deleted

I checked back to see if the project was still there and, lo and behold… it remained (--force skips the wait for graceful termination, but it does not bypass finalizers):

$ oc get project vlan-test

NAME DISPLAY NAME STATUS

vlan-test Terminating

So, I thought to myself, “OK, I have seen this before, some object was left behind, let's go delete it”…

$ oc get all -n vlan-test

Warning: apps.openshift.io/v1 DeploymentConfig is deprecated in v4.14+, unavailable in v4.10000+

Warning: kubevirt.io/v1 VirtualMachineInstancePresets is now deprecated and will be removed in v2.

No resources found

No resources found? That can't be right. Well, it is. Despite its name, oc get all does not return everything: it returns only a limited subset of resource types, and CustomResources are generally not part of it. So how do we find out whether a leftover CustomResource is keeping this namespace from being deleted? We are going to have to string some commands together for that one.
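Before scanning anything, it can be worth asking the namespace itself what it is waiting on. On reasonably recent Kubernetes versions, a Terminating namespace reports status conditions such as NamespaceContentRemaining and NamespaceFinalizersRemaining. The following is a minimal sketch; the function name show_namespace_conditions is made up for this post, and the exact messages will vary by cluster version.

```shell
# Hypothetical helper (not part of oc): print each status condition of a
# namespace as TYPE <tab> STATUS <tab> MESSAGE, one condition per line.
show_namespace_conditions() {
  local ns="$1"
  oc get namespace "$ns" \
    -o jsonpath='{range .status.conditions[*]}{.type}{"\t"}{.status}{"\t"}{.message}{"\n"}{end}'
}

# Example: show_namespace_conditions vlan-test
```

If a condition message names a resource type or a finalizer, that is a strong hint about where to look first.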

Finding CustomResources in a namespace

In order to find the CustomResource that might be causing the issue, we are going to make use of the oc and xargs commands to list all api-resources in our cluster and then look for them in our troublesome namespace one at a time…

oc api-resources --verbs=list --namespaced -o name | xargs -n 1 oc get --show-kind --ignore-not-found -n <namespace name>

This command lists every namespaced resource type on your cluster that supports the list verb, then runs oc get for each one against the namespace in question. It can take some time to run, and may even look like it is hung. Taking a quick look at my cluster, there are over 380 api-resources defined, and every one that matches the filter gets its own query. So let's take a look at the vlan-test project and see what is there.
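Because the one-liner is silent while it works, a loop that prints progress can be reassuring on a large cluster. This is only a sketch of the same idea; the function name scan_namespace is invented for illustration, and the oc flags are the same ones used in the one-liner.

```shell
# Hypothetical helper: check every listable, namespaced resource type
# for objects in the given namespace, reporting progress as it goes.
scan_namespace() {
  local ns="$1" resource
  oc api-resources --verbs=list --namespaced -o name |
    while read -r resource; do
      # Progress goes to stderr so stdout contains only the objects found.
      printf 'checking %s\n' "$resource" >&2
      oc get "$resource" --show-kind --ignore-not-found -n "$ns" </dev/null
    done
}

# Example: scan_namespace vlan-test
```

Called as scan_namespace vlan-test, it should list the same objects as the one-liner, which is what I actually ran: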

$ oc api-resources --verbs=list --namespaced -o name | xargs -n 1 oc get --show-kind --ignore-not-found -n vlan-test

NAME ROLE AGE

rolebinding.rbac.authorization.k8s.io/open-cluster-management:managedcluster:vlan-test:work ClusterRole/open-cluster-management:managedcluster:work 127d

NAME AGE

manifestwork.work.open-cluster-management.io/addon-application-manager-deploy-0 127d

manifestwork.work.open-cluster-management.io/addon-cert-policy-controller-deploy-0 127d

Well look at that, there are a few things left behind. In this case we have three objects, a RoleBinding and two ManifestWork CustomResources, that are not being cleaned up by their associated controller. All we need to do now is go ahead and delete these objects. Since they have been hanging around for a while, it can be assumed that they were already marked for deletion, but a Finalizer is keeping them from being cleaned up.
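That assumption is easy to check. An object that has been marked for deletion carries a metadata.deletionTimestamp, and whatever is holding it back is listed in metadata.finalizers. A minimal sketch, with a made-up function name show_finalizers:

```shell
# Hypothetical helper: print NAME <tab> DELETION TIMESTAMP <tab> FINALIZERS
# for a single object, given as TYPE/NAME.
show_finalizers() {
  local ns="$1" object="$2"
  oc get "$object" -n "$ns" \
    -o jsonpath='{.metadata.name}{"\t"}{.metadata.deletionTimestamp}{"\t"}{.metadata.finalizers}{"\n"}'
}

# Example:
# show_finalizers vlan-test manifestwork.work.open-cluster-management.io/addon-application-manager-deploy-0
```

An empty timestamp column means the object was never asked to go away, in which case a plain oc delete is the first thing to try.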

Why is the CustomResource controller not deleting these objects? Well that can depend on many factors, and really depends on the controller itself. We will not dive into the WHY here, as it can vary from controller to controller. If you want to dig in deeper, I suggest checking the logs of the associated controller for the details. This brings me to my next point:

WARNING: We are about to go around the CustomResource controller and force the removal of these objects. This might cause issues and leave orphaned objects. If you are not sure you should proceed, open a support ticket and validate this change before moving forward. You have been WARNED!

OK, with the warning out of the way, let's go ahead and remove the finalizer from each object, which should let the cleanup process start.

Removing the Finalizer

In order to remove the finalizer, we need to patch the object and set its finalizers to null. The easiest way to do this is from the command line using the following command:

oc patch <CustomResource name> -p '{ "metadata": { "finalizers": null }}' --type merge -n <namespace>

So, using the leftover objects found above as the example, let's patch each one individually so that they get removed from the cluster.

$ oc patch rolebinding.rbac.authorization.k8s.io/open-cluster-management:managedcluster:vlan-test:work -p '{ "metadata": { "finalizers": null }}' --type merge -n vlan-test

rolebinding.rbac.authorization.k8s.io/open-cluster-management:managedcluster:vlan-test:work patched

$ oc patch manifestwork.work.open-cluster-management.io/addon-application-manager-deploy-0 -p '{ "metadata": { "finalizers": null }}' --type merge -n vlan-test

manifestwork.work.open-cluster-management.io/addon-application-manager-deploy-0 patched

$ oc patch manifestwork.work.open-cluster-management.io/addon-cert-policy-controller-deploy-0 -p '{ "metadata": { "finalizers": null }}' --type merge -n vlan-test

manifestwork.work.open-cluster-management.io/addon-cert-policy-controller-deploy-0
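With more than a handful of leftovers, patching one at a time gets tedious. Here is a sketch of a loop that applies the same merge patch to every object of one resource type in a namespace. The function name clear_finalizers is hypothetical, and the warning above applies to every object it touches, so point it at one type at a time and only after looking at what is there.

```shell
# Hypothetical helper: remove finalizers from every object of one
# resource type in a namespace. This bypasses the owning controller.
clear_finalizers() {
  local ns="$1" kind="$2" object
  # -o name yields TYPE/NAME, which oc patch accepts directly.
  oc get "$kind" -n "$ns" -o name |
    while read -r object; do
      oc patch "$object" -n "$ns" --type merge \
        -p '{"metadata":{"finalizers":null}}' </dev/null
    done
}

# Example: clear_finalizers vlan-test manifestwork.work.open-cluster-management.io
```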

At this point we will give everything a minute to catch up, and then check on our troublesome project:

$ oc get project vlan-test

Error from server (NotFound): namespaces "vlan-test" not found

HUZZAH! We have successfully removed a namespace/project that has remained in a Terminating state for a very long time.
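If you would rather not keep re-running oc get by hand, oc wait can block until the namespace is actually gone. A small sketch, assuming a hypothetical wrapper name and an arbitrary default timeout of 120 seconds:

```shell
# Hypothetical helper: block until the namespace is deleted, or give up
# after the timeout (second argument, defaults to 120s).
wait_for_namespace_gone() {
  local ns="$1" timeout="${2:-120s}"
  oc wait --for=delete "namespace/$ns" --timeout="$timeout"
}

# Example: wait_for_namespace_gone vlan-test 5m
```

If it times out, something in the namespace is still holding on, and it is time to scan for leftovers again.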

Conclusion

There are times when a namespace or project gets stuck in a Terminating state and hangs around forever. If these build up, managing your cluster gets harder, and the Kubernetes API keeps spending time trying to delete them, which can delay other API calls, especially when multiple namespaces are stuck in this state.
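To find out whether any other namespaces are quietly stuck, a field selector on the namespace phase gives a quick inventory. This is a sketch with a made-up function name; a namespace that shows up here may simply be mid-deletion, so only the ones that are still listed some minutes later deserve a closer look.

```shell
# Hypothetical helper: list every namespace currently in the
# Terminating phase, one namespace/NAME per line.
list_terminating_namespaces() {
  oc get namespaces --field-selector status.phase=Terminating -o name
}

# Example: list_terminating_namespaces
```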