In the last blog post, Dealing with a Lack of Entropy on your OpenShift Cluster, we deployed the rng-tools software as a DaemonSet in a cluster. By using a DaemonSet, we took advantage of the tools that Kubernetes gives us for deploying an application to all targeted nodes in a cluster. This worked well for getting the rng daemon up and running on nodes that required it, but not all software will work this way. What if we need to install or update a package on the host Red Hat Enterprise Linux CoreOS (RHCOS) image itself? In the past this was frowned upon, if not impossible: RHCOS is an immutable OS delivered to you by Red Hat that cannot be modified.

Well, not any more. With the release of OCP 4.12, “CoreOS Image Layering” was introduced as a Tech Preview, and by 4.13 it was a GA feature. Image layering allows you to extend the functionality of your base RHCOS image by layering additional software onto it without modifying the base RHCOS image itself. Instead, it creates a custom layered image that includes all RHCOS functionality plus any additional functionality you add, and then deploys it to the nodes you specify in the cluster. This layering is achieved using the same container image build process we all know and love: the “Dockerfile” (or, to use the more vendor-neutral name, the “Containerfile”).

In this blog post we will use the same rng daemon use case as an example and we will update the RHCOS image to include this daemon as part of the CoreOS install. We will discuss the process used to build the new image layer, and some of the operational implications of using a custom OS image.

Prerequisites

In order to try this out in your environment, you will need a container image registry that is trusted and configured for your OpenShift cluster. For more information on how to configure an external registry to be trusted, see the documentation Configuring additional trust stores for image registry access.

In addition you will need the following:

- You will need your pull secret to pull the base container image from the Red Hat image registry.

- You will also need a place to run podman to build the image. I suggest a RHEL 9 workstation or server with a valid RHEL subscription if you plan to install software from the Red Hat RPM repositories.

Warning

Before we begin, a quick call out. This is directly from the Red Hat documentation and is very important to understand before moving forward with image layers:

As soon as you apply the custom layered image to your cluster, you effectively take ownership of your custom layered images and those nodes. While Red Hat remains responsible for maintaining and updating the base RHCOS image on standard nodes, you are responsible for maintaining and updating images on nodes that use a custom layered image. You assume the responsibility for the package you applied with the custom layered image and any issues that might arise with the package.

Building our new Base Image

To start, we are going to need to know the version of RHCOS you are currently running in your cluster. This can be retrieved from the cluster by running the following command:

$ oc adm release info --image-for rhel-coreos

quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c

Now that we have identified the base container image for our update, we will need to create a Containerfile (i.e. a Dockerfile). This file uses exactly the same syntax as a standard Dockerfile, because it is just a Dockerfile. For our use case, this will be a very simple Containerfile, with just one “RUN” command to install the required tools, enable the service, and commit the changes.

Start by creating a file called Containerfile with the following contents. Be sure to update the FROM statement with the version of RHCOS you got in the previous step:

FROM quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c

RUN rpm-ostree install rng-tools && \
    systemctl enable rngd && \
    rpm-ostree cleanup -m && \
    ostree container commit

A few things to note. First off, you will see that we are using the “rpm-ostree” command to install the packages, not “rpm”, “yum” or “dnf”. To learn more about rpm-ostree, how it works and what additional options are available, see the rpm-ostree documentation. We also need to commit the changes that we make to ostree, which is what the ostree container commit command at the end of the software install does. You can see other examples of Containerfiles in the official documentation, RHCOS image layering.
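The Containerfile above can also be generated from the release image reference, so that the FROM line always matches what the cluster reports. The following is a minimal sketch, assuming bash is available; the function name write_containerfile and its arguments are hypothetical helpers for illustration and are not part of any Red Hat tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Containerfile that layers one package onto a
# digest-pinned RHCOS base image and enables one systemd unit.
write_containerfile() {
  local base_image="$1" package="$2" unit="$3" out="${4:-Containerfile}"

  # Refuse tag-based references so the base image is always pinned by digest.
  case "$base_image" in
    *@sha256:*) ;;
    *) echo "error: base image must be pinned by digest: $base_image" >&2
       return 1 ;;
  esac

  # "\\" in an unquoted heredoc emits a single backslash (line continuation
  # in the generated Containerfile).
  cat > "$out" <<EOF
FROM ${base_image}
RUN rpm-ostree install ${package} && \\
    systemctl enable ${unit} && \\
    rpm-ostree cleanup -m && \\
    ostree container commit
EOF
}

# Example usage on a machine that is logged in to the cluster (not run here):
#   write_containerfile "$(oc adm release info --image-for rhel-coreos)" rng-tools rngd
```

Rejecting references without a digest is a design choice in this sketch: it keeps the build reproducible against the exact RHCOS version the cluster is running.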

With our Containerfile created, it's time to build our new image.

$ podman build -t registry.xphyrlab.net/coreos/coreos-rngd:4.14.14 .

STEP 1/2: FROM quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c

Trying to pull quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c...

Error: creating build container: initializing source docker://quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c: reading manifest sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c in quay.io/openshift-release-dev/ocp-v4.0-art-dev: unauthorized: access to the requested resource is not authorized

The build fails because we did not supply a valid pull secret. See the Prerequisites section above to get your pull secret, save it as pull-secret.json, and then re-run the command, using the REGISTRY_AUTH_FILE variable to specify the path to the file you downloaded.

$ REGISTRY_AUTH_FILE=pull-secret.json podman build -t registry.xphyrlab.net/coreos/coreos-rngd:4.14.14 .

STEP 1/2: FROM quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c

Trying to pull quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:4c7f78f9dae897f2315b290a641dc7c4f4bffd41fe19482c85274e760ea52e8c...

Getting image source signatures

Copying blob 8fdaabf3d77c done

STEP 2/2: RUN rpm-ostree install rng-tools && systemctl enable rngd && ostree container commit

Enabled rpm-md repositories: rhel-9-for-x86_64-baseos-rpms rhel-9-for-x86_64-appstream-rpms

Updating metadata for 'rhel-9-for-x86_64-baseos-rpms'...done

Updating metadata for 'rhel-9-for-x86_64-appstream-rpms'...done

Importing rpm-md...done

rpm-md repo 'rhel-9-for-x86_64-baseos-rpms'; generated: 2024-04-08T09:18:48Z solvables: 6486

rpm-md repo 'rhel-9-for-x86_64-appstream-rpms'; generated: 2024-04-08T12:28:13Z solvables: 18892

Resolving dependencies...done

Will download: 2 packages (112.8 kB)

Downloading from 'rhel-9-for-x86_64-baseos-rpms'...done

Installing 2 packages:

jitterentropy-3.4.1-2.el9.x86_64 (rhel-9-for-x86_64-baseos-rpms)

rng-tools-6.16-1.el9.x86_64 (rhel-9-for-x86_64-baseos-rpms)

Installing: jitterentropy-3.4.1-2.el9.x86_64 (rhel-9-for-x86_64-baseos-rpms)

Installing: rng-tools-6.16-1.el9.x86_64 (rhel-9-for-x86_64-baseos-rpms)

Created symlink /etc/systemd/system/multi-user.target.wants/rngd.service → /usr/lib/systemd/system/rngd.service.

COMMIT registry.xphyrlab.net/coreos/coreos-rngd:4.14.14

--> 5cbdd1eef5c8

Successfully tagged registry.xphyrlab.net/coreos/coreos-rngd:4.14.14

5cbdd1eef5c846459f079f4a9ca796850b4b40edcf1dfae585b8b0a4d30cece0

With the image built, we can now push that to our local image registry.

$ podman push registry.xphyrlab.net/coreos/coreos-rngd:4.14.14

Getting image source signatures

Copying blob e5be975dcc6e done

Copying blob e5be975dcc6e done

Copying config 5cbdd1eef5 done

Writing manifest to image destination

Congratulations, we now have a custom RHCOS image ready for deployment in your cluster.

Applying the updated image

In order to apply the image in your cluster, we are going to need to create a new MachineConfig that will apply the image to all targeted machines. We have covered MachineConfigs before in Understanding OpenShift MachineConfigs and MachineConfigPools so be sure to go back and take a look, if you need a refresher on MachineConfigs.

Create a file called custom-rhcos-image.yaml with the following contents. In this example we will be targeting all “worker” nodes. Be sure to update spec.osImageURL to point to the image in your container registry.

apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker
  name: os-layer-custom-rng
spec:
  osImageURL: registry.xphyrlab.net/coreos/coreos-rngd:4.14.14

We can now apply this change to our cluster:

$ oc login

$ oc create -f custom-rhcos-image.yaml

machineconfig.machineconfiguration.openshift.io/os-layer-custom-rng
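The MachineConfig above references the custom image by tag. A tag can later be pointed at a different image, whereas a digest cannot, so you may prefer to reference the image by digest, just as the base image is referenced in the Containerfile. The following is a minimal sketch of building such a reference; the function name pin_by_digest is a hypothetical helper, and the digest itself has to come from your registry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "repo:tag" plus a digest into "repo@sha256:...".
pin_by_digest() {
  local ref="$1" digest="$2"
  local repo="${ref%@*}"   # drop any digest already present

  # Drop a trailing ":tag", but keep a registry port such as host:5000/repo.
  case "${repo##*/}" in
    *:*) repo="${repo%:*}" ;;
  esac

  printf '%s@%s\n' "$repo" "$digest"
}

# Example usage (not run here; requires skopeo and access to the registry):
#   image=registry.xphyrlab.net/coreos/coreos-rngd:4.14.14
#   digest="$(skopeo inspect --format '{{.Digest}}' "docker://${image}")"
#   pin_by_digest "$image" "$digest"
```

The resulting string can be used as the value of spec.osImageURL in place of the tagged reference.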

With the new MachineConfig applied to our cluster, we can look at our MachineConfigPools to see how it is rolled out. Run the following command and note that the “worker” MachineConfigPool shows as “Updating”.

$ oc get mcp

NAME     CONFIG              UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-6   True      False      False      3              3                   3                     0                      8d
worker   rendered-worker-b   False     True       False      4              0                   0                     0                      8d

Wait until the UPDATED column for the “worker” pool shows “True” before continuing.
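Rather than re-running oc get mcp by hand, the wait can be scripted. The following is a minimal sketch that parses the tabular output shown above; the function name mcp_updated is a hypothetical helper, and it assumes the default column order (NAME, CONFIG, UPDATED, UPDATING, DEGRADED).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oc get mcp` output on stdin and succeed only if
# the named pool reports UPDATED=True, UPDATING=False and DEGRADED=False.
mcp_updated() {
  local pool="$1"
  awk -v pool="$pool" '
    $1 == pool {
      found = 1
      ok = ($3 == "True" && $4 == "False" && $5 == "False")
    }
    END {
      if (found && ok) exit 0
      exit 1   # pool missing, still updating, or degraded
    }
  '
}

# Example usage (not run here): poll every 30 seconds until the pool settles.
#   until oc get mcp | mcp_updated worker; do sleep 30; done
#
# oc also offers a built-in alternative that blocks on the pool condition:
#   oc wait mcp/worker --for=condition=Updated --timeout=30m
```

Note that the helper also fails when the pool is degraded, so a polling loop built on it should have its own timeout.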

Verifying our change

When the MachineConfigPool update completes, we can log into one of the worker nodes and validate that the rngd service is installed and running:

$ oc debug node/acm-775pj-worker-gcbpw

Starting pod/acm-775pj-worker-gcbpw-debug-bm6wg ...

To use host binaries, run `chroot /host`

Pod IP: 172.16.25.108

If you don't see a command prompt, try pressing enter.

sh-4.4# chroot /host

sh-5.1# systemctl status rngd

● rngd.service - Hardware RNG Entropy Gatherer Daemon

Loaded: loaded (/usr/lib/systemd/system/rngd.service; enabled; preset: enabled)

Active: active (running) since Mon 2024-04-08 18:56:57 UTC; 7min ago

Main PID: 1026 (rngd)

Tasks: 1 (limit: 101774)

Memory: 3.5M

CPU: 18.760s

CGroup: /system.slice/rngd.service

└─1026 /usr/sbin/rngd -f --fill-watermark=0 -x pkcs11 -x nist -x qrypt -D daemon:daemon

Apr 08 18:56:57 acm-775pj-worker-gcbpw rngd[1026]: Disabling 9: Qrypt quantum entropy beacon (qrypt)

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: Initializing available sources

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: [hwrng ]: Initialization Failed

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: [rdrand]: Enabling RDSEED rng support

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: [rdrand]: Initialized

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: [jitter]: JITTER timeout set to 5 sec

Apr 08 18:56:58 acm-775pj-worker-gcbpw rngd[1026]: [jitter]: Initializing AES buffer

Apr 08 18:57:03 acm-775pj-worker-gcbpw rngd[1026]: [jitter]: Unable to obtain AES key, disabling JITTER source

Apr 08 18:57:03 acm-775pj-worker-gcbpw rngd[1026]: [jitter]: Initialization Failed

Apr 08 18:57:03 acm-775pj-worker-gcbpw rngd[1026]: Process privileges have been dropped to 2:2

Applying custom configuration files for rngd

Now that rngd is an installed package on all worker nodes, and configured to run automatically at start up, what if we need to customize the configuration for rngd? You can create an additional MachineConfig that installs a configuration file onto the targeted machines. The blog post Understanding OpenShift MachineConfigs and MachineConfigPools gives some great examples of how to apply a custom configuration file that you can build upon.
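As an illustration of what such a MachineConfig could look like, here is a minimal sketch that generates one from a local file. The function name file_machineconfig, the MachineConfig name, and the Ignition spec version 3.2.0 are assumptions for this example; check which Ignition version your cluster expects and which file your rngd unit actually reads before using it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a MachineConfig that writes a local file to a
# path on every node in the given role. The file is embedded as a base64
# data URL in the Ignition "storage.files" section.
file_machineconfig() {
  local name="$1" role="$2" dest="$3" src="$4"
  local encoded

  # tr strips the line wrapping that some base64 implementations add.
  encoded="$(base64 < "$src" | tr -d '\n')"

  # mode 420 is the decimal form of octal 0644.
  cat <<EOF
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: ${role}
  name: ${name}
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
      - path: ${dest}
        mode: 420
        overwrite: true
        contents:
          source: data:text/plain;charset=utf-8;base64,${encoded}
EOF
}

# Example usage (not run here). The destination path is an assumption:
#   file_machineconfig 99-worker-rngd-config worker /etc/sysconfig/rngd ./rngd.conf | oc apply -f -
```

Keeping the configuration in a separate MachineConfig means it can be changed without rebuilding the custom OS image.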

Updating the cluster

Keeping in mind that an OpenShift cluster upgrade includes both the platform updates and the OS on the machines, what happens to the nodes running your custom OS images when you upgrade your cluster? The simplest answer is “Nothing”: nothing happens to the machines for which we have set a custom OS image. Machines that are not targeted by our MachineConfig (in this case, any nodes that are not “worker” nodes) will be upgraded to the new base OS version. The machines that are pointed to the specific image that we created will stay at that version. We can see this by running the following command after an upgrade:

$ oc get node -o=custom-columns=NODE:.metadata.name,"OS IMAGE":.status.nodeInfo.osImage

NODE OS IMAGE

acm-775pj-master-0 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-master-1 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-master-2 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-worker-gcbpw Red Hat Enterprise Linux CoreOS 414.92.202402201520-0 (Plow)

acm-775pj-worker-l5hmt Red Hat Enterprise Linux CoreOS 414.92.202402201520-0 (Plow)

acm-775pj-worker-tt4kv Red Hat Enterprise Linux CoreOS 414.92.202402201520-0 (Plow)

NOTE: The OS IMAGE versions for the control plane and worker nodes differ. This indicates that we are in fact running an older version of the base OS on our worker nodes.

In order to rectify this issue, you will need to go back to the Building our new Base Image and Applying the updated image sections and follow the directions to update your custom image with the latest base image.
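The comparison above can be scripted so that a mismatch is obvious at a glance. The following is a minimal sketch that summarizes the node listing; the function name os_image_counts is a hypothetical helper, and it assumes the two-column NODE / OS IMAGE output produced by the command shown earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "NODE  OS IMAGE" rows on stdin (header included)
# and print each distinct OS image preceded by the number of nodes running it.
os_image_counts() {
  awk 'NR > 1 && NF > 1 {
         image = $0
         sub(/^[^ \t]+[ \t]+/, "", image)   # drop the node-name column
         sub(/[ \t]+$/, "", image)          # drop trailing padding
         count[image]++
       }
       END {
         for (image in count) printf "%d %s\n", count[image], image
       }' | sort
}

# Example usage (not run here):
#   oc get node -o=custom-columns=NODE:.metadata.name,"OS IMAGE":.status.nodeInfo.osImage \
#     | os_image_counts
```

More than one line of output means that at least two OS image versions are present in the cluster.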

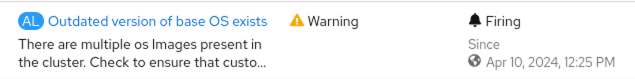

Custom Alerting for mismatched OS Images

While running commands manually may work to find outdated OS images in your cluster, it’s not very efficient. Let’s automate this process, so that the OpenShift cluster will alert us when our OS images are outdated. We can leverage the built-in cluster monitoring to create an alert that fires when the nodes have reported more than one OS version within the last day. This alert definition would look something like this:

apiVersion: monitoring.openshift.io/v1
kind: AlertingRule
metadata:
  name: os-version-mismatch
  namespace: openshift-monitoring
spec:
  groups:
  - name: OS Version Mismatch
    rules:
    - alert: Outdated version of base OS exists
      expr: count(count(last_over_time(node_os_info[1d])) by (pretty_name)) > 1
      labels:
        severity: warning
      annotations:
        message: There are multiple os Images present in the cluster. Check to ensure that custom OS images have been updated.

You can customize this alert for your own needs, and apply it to the cluster with oc apply -f os-version-mismatch.yaml.

NOTE: This will only catch the case where you are using a custom image on SOME of your cluster nodes. If you are using a custom image for all your nodes, this will not alert you to outdated images.

This will result in an alert in the OpenShift console like so:

The Future of OS Image Layering

As you have seen, in its current iteration, OS Image Layering is a manual process that needs to be managed by the cluster administrator. However, in future releases of OpenShift this manual process is going to be addressed with a feature tentatively called “On Cluster Layering”. On Cluster Layering will allow OpenShift to manage the creation of custom images for you. When a new base OS image is released, OpenShift will build a new custom image and deploy it as a part of the OpenShift upgrade process. Watch the release notes for future OpenShift releases to see when this new feature will be available.

Backing out the change/Cleanup

Backing this change out and going back to the default RHCOS image is a simple process. We need only delete the “os-layer-custom-rng” MachineConfig, and OpenShift will revert the targeted nodes to the default RHCOS base image:

$ oc delete machineconfig/os-layer-custom-rng

machineconfig.machineconfiguration.openshift.io "os-layer-custom-rng" deleted

You can validate that the OS image has reverted by checking to ensure that the OS Image matches the other nodes in your cluster:

$ oc get node -o=custom-columns=NODE:.metadata.name,"OS IMAGE":.status.nodeInfo.osImage

NODE OS IMAGE

acm-775pj-master-0 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-master-1 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-master-2 Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-worker-gcbpw Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-worker-l5hmt Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

acm-775pj-worker-tt4kv Red Hat Enterprise Linux CoreOS 414.92.202403191601-0 (Plow)

Conclusion

Between this post and the last one, we have seen two different ways to extend OpenShift nodes with additional system software. Each method has its own advantages and disadvantages from an operations/support perspective. If you have software or agents that cannot work in a container, or that a vendor will not support unless it is running in the host namespace, then CoreOS Image Layering may be the solution that you are looking for. Regardless of which method you choose to deploy the system software, you can do so in a declarative, “git-ops” friendly way with OpenShift.