Using the Synology K8s CSI Driver with OpenShift

By Mark DeNeve

This blog post has been updated with additional details and was originally published on 03-14-2022.

Adding storage to an OpenShift cluster can greatly increase the types of workloads you can run, including workloads such as OpenShift Virtualization, or databases such as MongoDB and PostgreSQL. Persistent volumes can be supplied in many different ways within OpenShift, including LocalVolumes, OpenShift Data Foundation, or an underlying cloud provider such as the vSphere provider. Storage providers for external storage arrays such as the Pure CSI Driver, Dell, the Infinidat CSI Driver and the Synology CSI Driver also exist. While I do not have a Pure Storage array or an Infinibox in my home lab, I do have a Synology array that supports iSCSI, and this will be the focus of the blog. The Synology CSI driver supports the creation of ReadWriteOnce (RWO) persistent file volumes along with ReadWriteMany (RWX) persistent block volumes as well as the creation of snapshots on both these volume types.

NOTE: This post will focus on the official Synology driver, but there is another driver that may also work called the Democratic CSI.

Due to the security model of OpenShift, deploying the Synology CSI driver requires two changes to the cluster. We need to grant the driver some additional permissions through a SecurityContextConstraints (SCC) profile, and we need to enable the iscsid service on each node so that the persistent volumes can be mounted. In this post we will use the tools OpenShift gives us, MachineConfigs and SecurityContextConstraints, to allow the driver to run.

Prerequisites

The Synology CSI driver works with DSM version 7.0 and above, so you will need a Synology array that supports version 7.0. To see if your Synology array can be upgraded to version 7.0, check the DSM 7.0 Release Notes. You will also need an OpenShift cluster to install the CSI driver on. The steps below have been tested on OpenShift 4.9, 4.11 and 4.12 but should work for OpenShift versions 4.7 and above. In addition, you will need the following command line utilities:

- git

- oc

PreSteps

Red Hat CoreOS does not ship with the iSCSI daemon started by default. Because the Synology driver relies on the Kubernetes “CSI-Attacher” pod for mounting iSCSI devices, we will need to enable the iscsid daemon. The iscsid service is already present on all OpenShift RHCOS machines, but it is disabled by default. We will leverage the OpenShift MachineConfigOperator (MCO) to enable it: the MachineConfig below updates systemd to enable the iSCSI service so that the CSI-Attacher can run iSCSI commands on the node. Start by creating a new file called “99-worker-custom-enable-iscsid.yml” and place the following contents in the file:

---
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker
  name: 99-worker-custom-enable-iscsid
spec:
  config:
    ignition:
      version: 3.1.0
    systemd:
      units:
      - enabled: true
        name: iscsid.service

NOTE: The MachineConfig listed above will apply to worker nodes only. Depending on your specific cluster configuration, you may need to adjust this label to properly select your required nodes. For a default install, this should work fine. Applying this change will reboot each individual worker node.

Apply the MachineConfig to your cluster by logging into your cluster with the oc command and then confirm that the change is applied to all of your nodes.

$ oc login

$ oc create -f 99-worker-custom-enable-iscsid.yml

$ oc get machineconfigpool

NAME     CONFIG                 UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-c5da   True      False      False      3              3                   3                     0                      34d
worker   rendered-worker-09ba   True      False      False      3              3                   3                     0                      34d

Ensure that all worker nodes show as being updated prior to continuing. Look for the “MACHINECOUNT” and “UPDATEDMACHINECOUNT” numbers to match.
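The comparison of the two counts can also be scripted instead of read by eye. The following is a minimal sketch, not part of the Synology tooling: the function name mcp_updated is hypothetical, and it assumes the column layout shown in the output above (MACHINECOUNT in the sixth column, UPDATEDMACHINECOUNT in the eighth).

```shell
# mcp_updated POOL
# Reads `oc get machineconfigpool` output on stdin and succeeds only when
# MACHINECOUNT (column 6) equals UPDATEDMACHINECOUNT (column 8) for POOL.
# The column positions are an assumption based on the output shown above.
mcp_updated() {
  awk -v pool="$1" '
    $1 == pool { found = 1; if ($6 != $8) mismatch = 1 }
    END { exit ((found && !mismatch) ? 0 : 1) }
  '
}

# Example usage against a live cluster (not executed here):
#   oc get machineconfigpool | mcp_updated worker && echo "worker pool is up to date"
```

The function fails when the pool is missing from the output as well as when the counts differ, so a typo in the pool name is not mistaken for a finished rollout.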

Install

With our worker nodes updated to support iSCSI, we can now work on installing the CSI driver. Start by cloning the GitHub repo for the Synology CSI Driver:

$ git clone https://github.com/SynologyOpenSource/synology-csi

$ cd synology-csi

Creating the Configuration File

We will need to create a configuration file which will contain the hostname of your Synology array, as well as the username and password to connect. Create a file called client-info.yml and put the following contents in it. Be sure to update the hostname, username, and password sections with the appropriate information for your array.

---
clients:
  - host: <hostname or IP address of array>
    port: 5000
    https: false
    username: <username>
    password: <password>

NOTE: You must use a user account that has full admin privileges on the Synology array. This is a requirement of the Synology array, as the Administrator is the only account with the proper permissions to create iSCSI LUNs.

NOTE 2: Keep in mind that the “admin” account is disabled by default on modern releases of DSM. It is suggested that you create a dedicated account for this integration.

We will now create a new project for the synology-csi driver to run in and create a secret with the contents of the client-info.yml that we just created:

$ oc new-project synology-csi

$ oc create secret generic client-info-secret --from-file=client-info.yml
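Before moving on, it can be worth confirming that the secret really contains the file you intended. The sketch below is one possible way to do that; the helper name show_client_info is hypothetical, and it assumes a base64 utility that accepts -d for decoding. It prints the stored client-info.yml with the password masked.

```shell
# show_client_info [NAMESPACE]
# Prints the decoded client-info.yml held in client-info-secret, with the
# value of any "password:" line replaced by asterisks.
# NAMESPACE defaults to synology-csi, the project created above.
show_client_info() {
  oc get secret client-info-secret -n "${1:-synology-csi}" \
    -o jsonpath='{.data.client-info\.yml}' \
    | base64 -d \
    | sed 's/^\([[:space:]]*password:\).*/\1 ********/'
}

# Example usage against a live cluster (not executed here):
#   show_client_info
```

The backslash in the jsonpath expression escapes the dot in the key name client-info.yml so that it is not treated as a path separator.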

Create SCC and Apply to Cluster

Because we are using OpenShift, we need to address the Security Context Constraints or SCC. The SCC below will allow the components of the Synology CSI driver to properly operate in an OpenShift cluster. Create a file called synology-csi-scc.yml with the following contents:

---
kind: SecurityContextConstraints
apiVersion: security.openshift.io/v1
metadata:
  name: synology-csi-scc
allowHostDirVolumePlugin: true
allowHostNetwork: true
allowPrivilegedContainer: true
allowedCapabilities:
- 'SYS_ADMIN'
defaultAddCapabilities: []
fsGroup:
  type: RunAsAny
groups: []
priority:
readOnlyRootFilesystem: false
requiredDropCapabilities: []
runAsUser:
  type: RunAsAny
seLinuxContext:
  type: RunAsAny
supplementalGroups:
  type: RunAsAny
users:
- system:serviceaccount:synology-csi:csi-controller-sa
- system:serviceaccount:synology-csi:csi-node-sa
- system:serviceaccount:synology-csi:csi-snapshotter-sa
volumes:
- '*'

Create the SecurityContextConstraints by applying them to the cluster:

$ oc create -f synology-csi-scc.yml
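If the driver pods later fail to start with permission errors, a quick first check is whether the SCC actually lists the three service accounts. The following is a rough sketch of such a check; the function names scc_has_user and check_synology_scc are hypothetical, while the SCC and service account names are taken from synology-csi-scc.yml above.

```shell
# scc_has_user SCC USER
# Succeeds when USER appears in the users list of the named SCC.
scc_has_user() {
  oc get scc "$1" -o jsonpath='{.users[*]}' | tr ' ' '\n' | grep -Fxq "$2"
}

# check_synology_scc
# Reports, for each service account referenced in synology-csi-scc.yml,
# whether it is present in the SCC on the cluster.
check_synology_scc() {
  for sa in csi-controller-sa csi-node-sa csi-snapshotter-sa; do
    if scc_has_user synology-csi-scc "system:serviceaccount:synology-csi:$sa"; then
      echo "ok: $sa"
    else
      echo "missing: $sa"
    fi
  done
}

# Example usage against a live cluster (not executed here):
#   check_synology_scc
```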

Defining the Synology iSCSI Storage Class

We now need to decide three things: how the Synology driver will handle PersistentVolumes once they are released, which Synology volume the new iSCSI devices should be created on, and what filesystem should be used when creating the new PersistentVolume. The syno-iscsi-storage-class.yml file determines this configuration. Using your favorite editor, open the deploy/kubernetes/v1.20/storage-class.yml file from the Git repo. It will contain something similar to this:

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: synology-iscsi-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: csi.san.synology.com
parameters:
  protocol: 'iscsi'
  location: '/volume2'
  dsm: '<ip address of your synology array>'
  csi.storage.k8s.io/fstype: 'ext4'
reclaimPolicy: Delete
allowVolumeExpansion: true

If you do not want the driver to use the default ‘volume1’ as your storage location, be sure to update the location parameter. You will also need to determine if you want the CSI driver to delete the volumes when they are no longer in use by setting the reclaimPolicy to Delete. Ensure that csi.storage.k8s.io/fstype is explicitly set to ext4 or xfs depending on your preference, or you will have issues with filesystem permissions when you go to use the volumes created. If you plan to use the volume snapshot feature, be sure to review the deploy/kubernetes/v1.20/snapshotter/volume-snapshot-class.yml as well and update as appropriate.

Defining the Synology SMB/CIFS Storage Class

The Synology CSI driver also supports the creation of SMB/CIFS volumes, which work well when you are working with Windows Containers. We will create a second storage class for this protocol. To start, we will need to create a secret that contains the login account you want to use to connect to the SMB share. (NOTE: This does not need to be the same account used for the initial configuration.)

---
apiVersion: v1
kind: Secret
metadata:
  name: cifs-csi-credentials
  namespace: synology-csi
type: Opaque
stringData:
  username: <username> # DSM user account accessing the shared folder
  password: <password> # DSM user password accessing the shared folder

Next, you will need to create a StorageClass configuration for using the SMB protocol. Make sure that the node-stage-secret-name and node-stage-secret-namespace match the secret that you created in the previous step.

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: synology-smb-storage
provisioner: csi.san.synology.com
parameters:
  protocol: 'smb'
  location: '/volume2'
  dsm: '<ip address of your synology array>'
  csi.storage.k8s.io/node-stage-secret-name: "cifs-csi-credentials"
  csi.storage.k8s.io/node-stage-secret-namespace: "synology-csi"
reclaimPolicy: Delete
allowVolumeExpansion: true

As with the configuration for iSCSI, be sure to set the location parameter in the storage class (e.g. ‘/volume2’) to the volume you want to use as your storage location. You will also need to determine if you want the CSI driver to delete the volumes when they are no longer in use by setting the reclaimPolicy to Delete.

Deploying the Driver

At this point we can use the files located in synology-csi/deploy/kubernetes/v1.20 to deploy the Kubernetes CSI Driver. We will apply each file individually.

# Start by applying the synology-csi controller

oc create -f deploy/kubernetes/v1.20/controller.yml

# Next we will apply the csi-driver definition

oc create -f deploy/kubernetes/v1.20/csi-driver.yml

# Next create the node handler

oc create -f deploy/kubernetes/v1.20/node.yml

# Finally create the storage class

oc create -f deploy/kubernetes/v1.20/syno-iscsi-storage-class.yml

# If you want to create a CIFS/SMB storage class apply this as well

# oc create -f deploy/kubernetes/v1.20/syno-cifs-storage-class.yml

# If you wish to use the snapshot feature we can also apply the snapshot driver

oc create -f deploy/kubernetes/v1.20/snapshotter/snapshotter.yaml

oc create -f deploy/kubernetes/v1.20/snapshotter/volume-snapshot-class.yml

NOTE: We are using the files from the v1.20 directory as this takes advantage of the v1 APIs for persistent storage creation. If you are running an OpenShift cluster prior to version 4.7, you should use the files in the v1.19 directory.

Check to ensure that all of the pods are successfully deployed before proceeding:

$ oc get pods

NAME                         READY   STATUS    RESTARTS   AGE
synology-csi-controller-0    4/4     Running   6          7d1h
synology-csi-node-9496p      2/2     Running   2          7d1h
synology-csi-node-w65h9      2/2     Running   2          7d1h
synology-csi-node-wpw2d      2/2     Running   2          7d1h
synology-csi-snapshotter-0   2/2     Running   2          6d19h

NOTE: The number of “synology-csi-node-xxxxx” pods you see will directly correlate to the total number of worker nodes deployed in your cluster.
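On a cluster with many worker nodes, scanning the pod list by hand gets tedious. As a rough sketch, the check could be automated by parsing the same output. The function name pods_ready is hypothetical, and it assumes the column layout shown above (READY second, STATUS third).

```shell
# pods_ready
# Reads `oc get pods` output on stdin. Succeeds only when at least one pod
# is listed and every pod is Running with all containers ready ("n/n").
# Prints the name of each pod that does not meet that condition.
pods_ready() {
  awk '
    NR == 1 { next }                      # skip the header row
    {
      seen = 1
      split($2, ready, "/")               # READY looks like "2/2"
      if (ready[1] != ready[2] || $3 != "Running") {
        print "not ready: " $1
        bad = 1
      }
    }
    END { exit ((seen && !bad) ? 0 : 1) }
  '
}

# Example usage against a live cluster (not executed here):
#   oc get pods -n synology-csi | pods_ready && echo "driver is up"
```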

Creating your first PV

With the Synology CSI driver installed we can now go ahead and create a Persistent Volume. We will create this persistent volume in a new namespace to make cleanup easier.

$ oc new-project pvctest

Create a new file called my-file-storage-claim.yml with the following in it.

---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: my-file-storage-claim
  namespace: pvctest
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: synology-iscsi-storage
  volumeMode: Filesystem

Apply the file to your cluster to create a new PV:

$ oc create -f my-file-storage-claim.yml

persistentvolumeclaim/my-file-storage-claim created

We can check the status of the newly created PV by running the following command:

$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

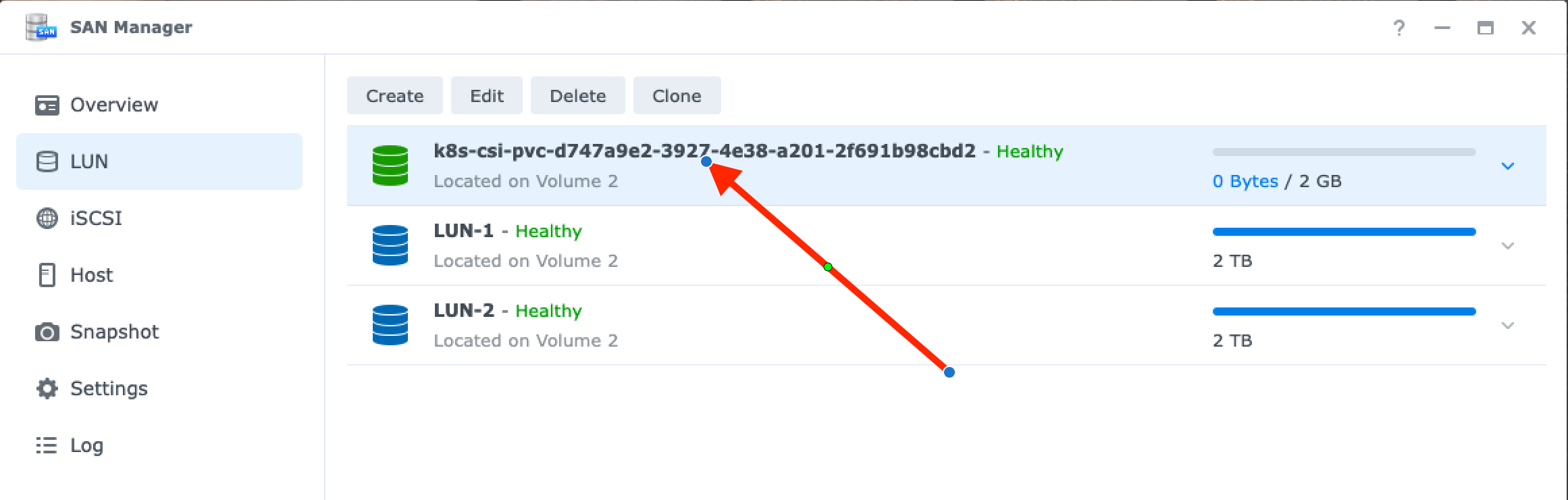

my-file-storage-claim Bound pvc-d747a9e2-3927-4e38-a201-2f691b98cbd2 2Gi RWO synology-iscsi-storage 19s

Look for the STATUS to be “Bound” to know that the new iSCSI volume was created. We can also see this in the Synology UI. Note that the VOLUME name from the oc get pvc command matches the name of the LUN in the Synology UI with “k8s-csi” prepended to the name:
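The claim may take a few seconds to bind, so a script that creates it usually needs to wait. The following sketch polls the claim until it is Bound and then prints the LUN name to look for on the array. Both function names are hypothetical, as is the POLL_INTERVAL variable, and the hyphen placed between “k8s-csi” and the volume name is an assumption about how the prefix is joined.

```shell
# wait_for_bound PVC NAMESPACE [TRIES]
# Polls the claim's phase until it reads "Bound". Gives up after TRIES
# attempts (default 30), sleeping POLL_INTERVAL seconds (default 2) between.
wait_for_bound() {
  pvc=$1; ns=$2; tries=${3:-30}
  while [ "$tries" -gt 0 ]; do
    phase=$(oc get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')
    [ "$phase" = "Bound" ] && return 0
    tries=$((tries - 1))
    sleep "${POLL_INTERVAL:-2}"
  done
  return 1
}

# expected_lun_name PVC NAMESPACE
# Prints the bound volume name with the "k8s-csi" prefix described above.
# The "-" separator is an assumption, not taken from the driver source.
expected_lun_name() {
  vol=$(oc get pvc "$1" -n "$2" -o jsonpath='{.spec.volumeName}')
  echo "k8s-csi-$vol"
}

# Example usage against a live cluster (not executed here):
#   wait_for_bound my-file-storage-claim pvctest && \
#     expected_lun_name my-file-storage-claim pvctest
```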

Testing our PVC

In order to test out the newly created Persistent Volume, we will create a Kubernetes job that runs the Unix command dd against the persistent volume. We will use a Red Hat UBI base image to run the command in. Create a file called test-write-job.yml with the following contents:

---
apiVersion: batch/v1
kind: Job
metadata:
  name: write
spec:
  template:
    metadata:
      name: write
    spec:
      containers:
      - name: write
        image: registry.access.redhat.com/ubi8/ubi-minimal:latest
        command: ["dd","if=/dev/zero","of=/mnt/pv/test.img","bs=1G","count=1","oflag=dsync"]
        volumeMounts:
        - mountPath: "/mnt/pv"
          name: test-volume
      volumes:
      - name: test-volume
        persistentVolumeClaim:
          claimName: my-file-storage-claim
      restartPolicy: Never

Apply the above YAML to your cluster and then validate that the job was created:

$ oc create -f test-write-job.yml

job.batch/write created

$ oc get jobs

NAME    COMPLETIONS   DURATION   AGE
write   0/1           1s         1s

Using the oc get jobs command, wait until COMPLETIONS shows 1/1 before continuing. This signifies that the job has completed. With the job complete, we can now review the logs generated by our test job by running oc logs job/write:

$ oc logs job/write

1+0 records in

1+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 13.013 s, 82.5 MB/s

SUCCESS! If there are errors about permission issues, be sure that you followed the instructions in Defining the Synology iSCSI Storage Class and then re-run your job.
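The manual polling with oc get jobs described above can be replaced by a single blocking call. The sketch below wraps oc wait and oc logs in a hypothetical helper, wait_and_report, which prints only the last log line (the dd throughput summary). The 300s default timeout is an arbitrary choice.

```shell
# wait_and_report JOB [TIMEOUT]
# Blocks until the job reports the "complete" condition, then prints the
# final line of its log. Returns non-zero if the wait times out or fails.
wait_and_report() {
  job=$1; timeout=${2:-300s}
  oc wait --for=condition=complete "job/$job" --timeout="$timeout" || return 1
  oc logs "job/$job" | tail -n 1
}

# Example usage against a live cluster (not executed here):
#   wait_and_report write
```

A job whose pod fails never reaches the complete condition, so in that case the call simply runs until the timeout expires.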

Cleanup

With our new Synology CSI driver tested, we can clean up our test PVC and job using the following commands:

$ oc delete job/write

job.batch "write" deleted

$ oc delete pvc/my-file-storage-claim

If you set the reclaimPolicy to Delete in the section Defining the Synology iSCSI Storage Class, the Persistent Volume will be automatically deleted. You can check in the Synology UI to validate that the LUN has been destroyed.

Conclusion

If you are looking to expand the types of workloads you have in your OpenShift cluster and you have a Synology array in your data center, using the Synology CSI driver to dynamically create iSCSI LUNs is a quick and flexible way to meet this need.